How To Read This Book

Welcome to Logic for Systems! Here are some quick hints that will help you use this book effectively.

This book is a draft, and there are some sections that are currently being filled in. If you want to use these materials and need support (e.g., you want to use the Forge homeworks that go with it, or a specific section you need is incomplete), please contact Tim_Nelson@brown.edu.

Organization

The book is organized into a series of short sections, which are grouped into chapters:

- Chapter 1 (Beyond Testing) briefly motivates the content in this book and sets the stage with a new technique for testing your software.

- Chapter 2 (Modeling Static Scenarios) provides an introduction to modeling systems in Forge by focusing on systems that don't change over time.

- Chapter 3 (Discrete Event Systems) shows a common way to model the state of a system changing over time.

- Chapter 4 (Modeling Relationships) enriches the modeling language to support arbitrary relations between objects in the world.

- Chapter 5 (Temporal Specification) covers temporal operators, which are commonly used in industrial modeling and specification, and how to use them.

- Chapter 6 (Case Studies) touches on some larger applications of lightweight formal methods. Some of these will involve large models written in Forge, and others will lean more heavily on industrial systems.

- The Forge Documentation, which covers the syntax of the language more concisely and isn't focused on teaching.

Each chapter contains a variety of examples: data structures, puzzles, algorithms, hardware concepts, etc. We hope that the diversity of domains covered means that everyone will see an example that resonates with them. Full language and tool documentation come after the main body of the book.

What does this book assume? What is its goal?

This book does not assume any prior background with formal methods or even discrete math. It does assume the reader has written programs before at the level of an introductory college course.

The goal of this chapter progression is to prepare the reader to formally model and reason about a domain of their own choosing in Forge (or perhaps in a related tool, such as an SMT solver).

With that in mind...

Do More Than Read

This book is example driven, and the examples are almost always built up from the beginning. The flow of the examples is deliberate, and might even take a "wrong turn" that is meant to teach a specific lesson before changing direction. If you try to read the book passively, you're likely to be very disappointed. Worse, you may not actually be able to do much with the material after reading.

Instead, follow along, pasting each snippet of code or Forge model into the appropriate tool, and try it! Better yet, try modifying it and see what happens. You'll get much more out of each section as a result. Forge especially is designed to aid experimentation. Let your motto be:

Navigating the Book Site

With JavaScript enabled, the table of contents (to the left, by default) will allow you to select a specific section of this book. Likewise, the search bar (enabled via the "Toggle Searchbar" icon) should allow you to search for arbitrary alphanumeric phrases in the full text; unfortunately, non-alphanumeric operators are not supported by search at present.

The three buttons for popping out the table of contents, changing the color theme, and searching are in the upper-left corner of this page, by default. If you do not see them, please ensure that JavaScript is enabled.

The table of contents for the Forge documentation is expandable. Once it is open, click the ❱ icons to expand individual sections and subsections to browse more easily!


Callout Boxes

Callout boxes can give valuable warnings, helpful hints, and other supplemental information. They are color- and symbol-coded depending on the type of information. For example:

If you see a callout labeled "CSCI 1710", it means that it's specifically for students in Brown University's CSCI 1710 course, Logic for Systems.

Exercises

Every now and then, you'll find question prompts, followed by a clickable header that looks like this:

Think, then click!

SPOILER TEXT

If you click the arrow, it will expand to show hidden text, often revealing an answer or some other piece of information that is meant to be read after you've thought about the question. When you see these exercises, don't skip past them, and don't just read the hidden text.

Our Value Proposition

Everybody has endless demands on their time. If you're a student, you might be deciding which classes to take. There's never enough time to take them all, so you need to prioritize based on expected value. If you're a professional, you're deciding how to best use your limited "free" time to learn new skills and stay current. Either way, you're probably wondering: What good is this book? (And if you aren't asking that, you ought to be.)

You need many different skills for a successful career. This book won't teach you how to work with other people, or manage your tasks, or give and receive feedback. It won't teach you to program either; there are plenty of other books for that. Instead, this book will teach you:

- how to think more richly about what matters about a system;

- how to better express what you want from it;

- how to more thoroughly evaluate what a system actually does give you; and

- how to use constraints and constraint solvers in your work (because we'll use them as tools to help us out).

It will also give you a set of baseline skills that will aid you in using any further formal-methods techniques you might encounter in your work, such as advanced type systems, program verification, theorem proving, and more.

Modeling: What really matters?

There's a useful maxim by George Box: "All models are wrong, but some are useful". The only completely accurate model of a system is that system itself, including all of its real external context. This is impractical; instead, a modeler needs to make choices about what really matters to them: what do you keep, and what do you disregard? Done well, a model gets at the essence of a system. Done poorly, a model yields nothing useful or, worse, gives a false sense of security.

I suspect that people were saying "All models are wrong" long before Box did! But it's worth reading this quote of his from 1978, and thinking about the implications.

Now it would be very remarkable if any system existing in the real world could be exactly represented by any simple model. However, cunningly chosen parsimonious models often do provide remarkably useful approximations. For example, the law $PV = RT$ relating pressure $P$, volume $V$ and temperature $T$ of an "ideal" gas via a constant $R$ is not exactly true for any real gas, but it frequently provides a useful approximation and furthermore its structure is informative since it springs from a physical view of the behavior of gas molecules. For such a model there is no need to ask the question "Is the model true?". If "truth" is to be the "whole truth" the answer must be "No". The only question of interest is "Is the model illuminating and useful?".

(Bolding mine.) TODO: check quote text, Wiki link does not lead to a readable paper.

If you want to do software (or hardware) engineering, some amount of modeling is unavoidable. Here are two basic examples of many.

Data Models Everywhere

You might already have benefitted from a good model (or suffered from a poor one) in your programming work. Whenever you write data definitions or class declarations in a program, you're modeling. The ground truth of the data is rarely identical to its representation. You decide on a particular way that it should be stored, transformed, and accessed. You say how one piece of data relates to another.

Your data-modeling choices affect more than just execution speed: if a pizza order can't have a delivery address that is separate from the user's billing address, important user needs will be neglected. On the other hand, it is probably OK to leave the choice of cardboard box out of the user-facing order. An order has a delivery time, which probably comes with a time zone. You could model the time zone as an integer offset from UTC, but this is a very bad idea: for instance, some zones (like India's UTC+5:30) aren't a whole number of hours away from UTC, and a location's offset can change with daylight saving time. And, since there are 24 hours in a day, the real world imposes range limits: a time zone that's a million hours ahead of UTC is probably a buggy value, even though 1000000 is far smaller than the largest value a signed 32-bit int can represent.
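As a minimal sketch of what some of these choices might look like in code, here is part of a hypothetical pizza-order data model in Python. The names `Address`, `PizzaOrder`, and `fixed_offset` are invented for illustration. Instead of a bare integer, the delivery time is a timezone-aware `datetime`, and Python's standard `datetime.timezone` already enforces a range limit: it rejects any fixed offset whose magnitude is 24 hours or more.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class Address:
    street: str
    city: str


@dataclass(frozen=True)
class PizzaOrder:
    # Hypothetical model: delivery and billing addresses are separate fields.
    billing_address: Address
    delivery_address: Address
    # A timezone-aware datetime rather than a bare integer hour offset.
    delivery_time: datetime

    def __post_init__(self) -> None:
        if self.delivery_time.tzinfo is None:
            raise ValueError("delivery_time must include a time zone")


def fixed_offset(hours: int, minutes: int = 0) -> timezone:
    """Build a fixed UTC offset; timezone() raises ValueError unless |offset| < 24h."""
    return timezone(timedelta(hours=hours, minutes=minutes))


# UTC+5:30 is representable here, unlike with a whole-hour integer.
ist = fixed_offset(5, 30)
home = Address("1 Main St", "Providence")
order = PizzaOrder(home, home, datetime(2024, 1, 1, 18, 0, tzinfo=ist))

# A million hours ahead of UTC is rejected, even though 1000000 fits in an int.
try:
    fixed_offset(1_000_000)
    rejected = False
except ValueError:
    rejected = True
print(rejected)  # True
```

The design choice here is to let the type carry the range limit, so an out-of-range offset fails at construction time rather than surfacing later as a strange delivery time.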

Data vs. Its Representation

The level of abstraction matters, too. Suppose that your app scans handwritten orders. Then handwriting becomes pixels, which are converted into an instance of your data model, which is implemented as bytes, which are stored in hardware flip-flops and so on. You probably don't need, or want, to keep all those perspectives in mind simultaneously. Languages are valuable in part because of the abstractions they foster, even if those abstractions are incomplete—they can be usefully incomplete! What matters is whether the abstraction level suits your needs, and your users'.

I learned to program in the 1990s, when practitioners were at odds over automated vs. manual memory management. It was often claimed that a programmer needed to really understand what was happening at the hardware level, and manually control memory allocation and deallocation for the sake of performance. Most of us don't think that anymore, unless we need to! Sometimes we do; often we don't. Focus your attention on what matters for the task at hand.

The examples don't stop: In security, a threat model says what powers an attacker has. In robotics and AI, reinforcement learning works over a probabilistic model of real space. And so on. The key is: what matters for your needs? Box had something to say about that, too:

Since all models are wrong the scientist must be alert to what is importantly wrong. It is inappropriate to be concerned about safety from mice when there are tigers abroad.

TODO: add citation

Specification: What do you want?

Suppose that I want to store date-and-time values in a computer program. That's easy enough to say, right? But the devil is in the details: What is the layout of the data? Which fields will be stored, and which will be omitted? Which values are valid, and which are out of bounds? Is the format efficiently serializable? How far backward in time should the format extend, and how far into the future should it reach?

And which calendar are we using, anyway?

If our programs are meant to work with dates prior to the 1600s, only their historical context can say whether they should be interpreted with the Gregorian calendar or the Julian calendar. And that's just two (Eurocentric) possibilities!
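Real libraries have to answer these questions too. Python's standard `datetime` module, for example, documents that it uses the proleptic Gregorian calendar (the Gregorian calendar extended backward indefinitely) and supports only years 1 through 9999. The short sketch below shows one consequence: in the countries that switched calendars in October 1582, the day after October 4 was October 15, yet `datetime` treats those dates as 11 days apart and accepts dates in between that never occurred there.

```python
from datetime import MAXYEAR, MINYEAR, date

# The range of years this format can represent at all.
print(MINYEAR, MAXYEAR)  # 1 9999

# Consecutive days where the 1582 switch happened, but not to datetime.
last_julian_day = date(1582, 10, 4)
first_gregorian_day = date(1582, 10, 15)
gap = (first_gregorian_day - last_julian_day).days
print(gap)  # 11

# A date that never occurred in those countries is still accepted.
phantom = date(1582, 10, 10)

# Dates outside the supported range are rejected outright.
try:
    date(MAXYEAR + 1, 1, 1)
    out_of_range = False
except ValueError:
    out_of_range = True
```

None of this is a bug: it's a specification decision that is fine for a food delivery app and wrong for a historian's archive.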

If you're just building a food delivery app, you probably only need to think about some of these aspects of dates and times. If you're defining an international standard, you need to think about them all.

Either way, being able to think carefully about your specification can separate quiet success from famous failure.

Validation and Verification: Did you get what you wanted?

Whether you're working out an algorithm on paper or checking a finished implementation, you need some means of judging correctness. Here, too, precision (and a little bit of adversarial thinking) matters in industry:

- When ordinary testing isn't good enough, techniques like fuzzing, property-based testing, and others give you new evaluative power.

- When you're updating, refactoring, or optimizing a system, a model of its ideal behavior can be leveraged for validation. Here's an example from 2014 (click through the header of the linked article to read the original blog post).

- A model of the system's behavior is also useful for test-case generation, enabling tools to generate test suites with higher coverage of the potential state space.

And all that's even before we consider more heavyweight methods, like model checking and program verification.

Formalism Isn't Absolute

The word "formal" has accumulated some unfortunate connotations: pedantry, stuffiness, ivory-tower snootiness, being an architecture astronaut, etc. The truth is that formalism is a sliding scale. We can take what we need and leave the rest. What really matters is the ability to precisely express your goals, and the ability to take advantage of that precision.

In fact, formalism powers many software tools that help us to reason about the systems we create. In the next section, we'll start sketching what that means for us as engineers and humans.

Logic for Systems

Setting the Stage

If you're reading this book, you've probably had to complete some programming assignments—or at least written some small program for a course or an online tutorial. Take a moment to list a handful of such assignments: what did you have to build?

Now ask yourself:

- How did you know what behavior to implement?

- How did you know which data structures or algorithms were the right ones to use?

- How did you know your program "worked", in the end?

In the context of assignments, there are expected answers to these questions. For instance, you might say you know your code worked because you tested it (very thoroughly, I'm sure)! But is that really the truth? In terms of consequences, the true bar for excellence in a programming class is the grade you got. That is:

- You knew what to do because you were told what to do.

- You probably knew which algorithms to use because they'd just been taught to you.

- You were confident that your programs worked because you were told by an authority figure.

But outside that context, as (say) a professional engineer, you lose the safety net. You might be working on a program that controls the fate of billions of dollars, tempers geopolitical strife, or controls a patient's insulin pump. Even if you had a TA, would you trust them to tell you that those programs worked? Would you trust your boss to understand exactly what needed to happen, and tell you exactly how to do it? Probably not! Instead, you need to think carefully about what you want, how to build it, and how to evaluate what you and others have built.

As engineers, we strive for perfection. But perfection is an ideal; it's not obtainable. Why? First: we're human. Even if we could read our customers' minds, that's no guarantee that they know what they really need. And even if we can prove our code is correct, we might be checking for the wrong things. Second: our environment is hostile. Computers break. Patches to dependencies introduce errors. Cosmic radiation can flip bits in memory. 100% reliability is hopeless. Anyone who tells you differently is trying to sell you something.

But that doesn't mean we should give up. It just means that we should moderate our expectations. Instead of focusing on perfect correctness, try to increase your confidence in correctness.

Unit Testing

Hopefully we all agree that unit testing with concrete input-output pairs has its virtues and that we should keep doing it. But let's investigate what it does and doesn't do well.

Exercise: Make two lists: What does unit testing do well? What doesn't it do well? (Hint: Why do we test? What could go wrong, and how can the sort of testing you've done in other classes let us down?)

Think, then click!

You might have observed that (for most interesting programs, anyway) tests cannot be exhaustive because there are infinitely many possible inputs. And since we're forced to test non-exhaustively, we have to hope we pick good tests: tests that exercise not only our own implementation, but others too (like the implementation that eventually replaces yours).

Worse, we can't test the things we don't think of, or don't know about; we're vulnerable to our limited knowledge, the availability heuristic, confirmation bias, and so on. In fact, we humans are generally ill-equipped for logical reasoning, even if trained.

Humans and Reasoning

A Toy Example

Suppose we're thinking about the workings of a small company. We're given some facts about the company, and have to answer a question based on those facts. Here's an example. We know that:

- Alice directly supervises Bob.

- Bob directly supervises Charlie.

- Alice graduated Brown.

- Charlie graduated Harvale.

To keep things simple, we'll assume that all three people graduated some university.

Exercise: Does someone who graduated from Brown directly supervise someone who graduated from another university?

Think, then click.

Yes! Regardless of whether Bob graduated from Brown, some Brown graduate supervises some non-Brown graduate. Reasoning by hypotheticals, there is one fact we don't know: where Bob graduated. In case he graduated Brown, he supervises Charlie, a non-Brown graduate. In case he graduated from another school, he's supervised by Alice, a Brown graduate.

Humans tend to be very bad at reasoning by hypotheticals. There's a temptation to think that this puzzle isn't solvable because we don't know where Bob graduated from. Even Tim thought this at first after seeing the puzzle—in grad school! For logic!

Now imagine a puzzle with a thousand of these unknowns. A thousand boolean variables means $2^{1000}$ cases to reason through. Want to use a computer yet?
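Here is what "use a computer" might look like at its most naive: a Python sketch that encodes the toy company's facts and checks the claim in every possible world, trying each unknown fact (here, only where Bob graduated) both ways. The encoding, a dictionary mapping each person to whether they graduated from Brown, is our own choice for illustration. With $n$ unknowns this loop runs $2^n$ times, which is why the tools in this book rely on solvers that search far more cleverly than brute-force enumeration.

```python
from itertools import product

# Facts from the puzzle: who directly supervises whom.
SUPERVISES = [("Alice", "Bob"), ("Bob", "Charlie")]
# Known facts: True means "graduated from Brown". Charlie graduated Harvale.
KNOWN = {"Alice": True, "Charlie": False}
UNKNOWN = ["Bob"]


def claim(brown: dict) -> bool:
    """Does some Brown graduate directly supervise some non-Brown graduate?"""
    return any(brown[boss] and not brown[report] for boss, report in SUPERVISES)


def worlds():
    """Yield every assignment of the unknown facts, combined with the known ones."""
    for values in product([True, False], repeat=len(UNKNOWN)):
        yield {**KNOWN, **dict(zip(UNKNOWN, values))}


# The claim holds no matter where Bob graduated.
print(all(claim(w) for w in worlds()))  # True
```

This is exactly the reasoning by hypotheticals from the answer above, made mechanical: one world per case, and the claim must hold in all of them.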

This isn't really about logic puzzles.

A Real Scenario

There's a real cryptographic protocol called the Needham-Schroeder public-key protocol. You can read about it here. Unfortunately the protocol has a bug: it's vulnerable to attack if one of the principals can be fooled into starting an exchange with a badly-behaved or compromised agent. We won't go into specifics. Instead, let's focus on the fact that it's quite easy to get things like protocols wrong, and sometimes challenging for us humans to completely explore all possible behaviors, especially since there might be behaviors we'd never even considered! It sure would be nice if we could get a computer to help out with that.

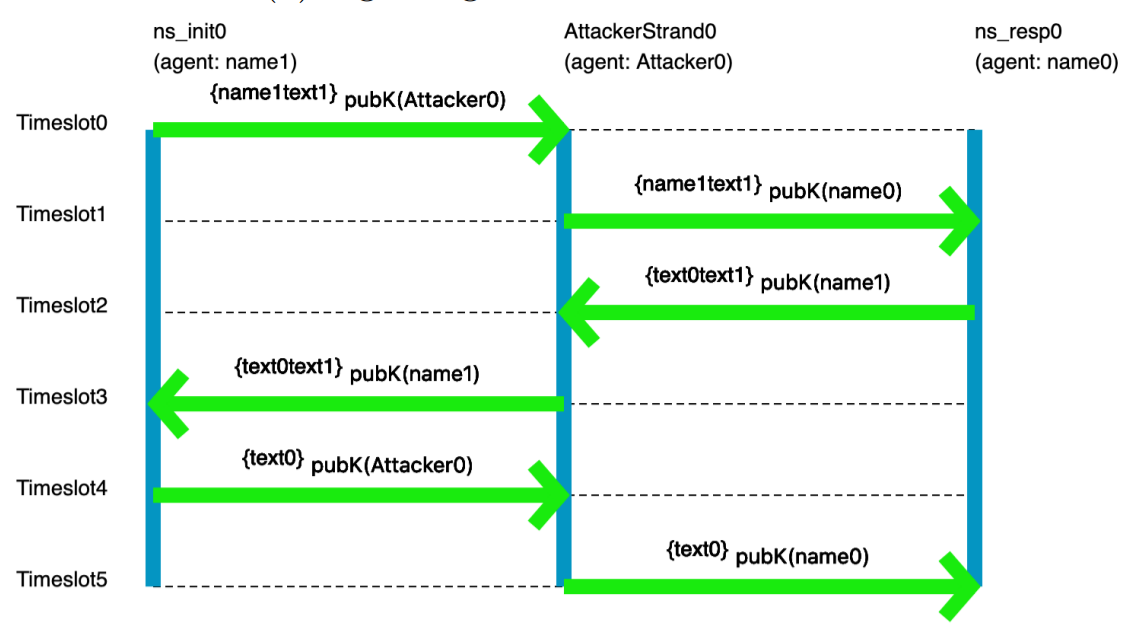

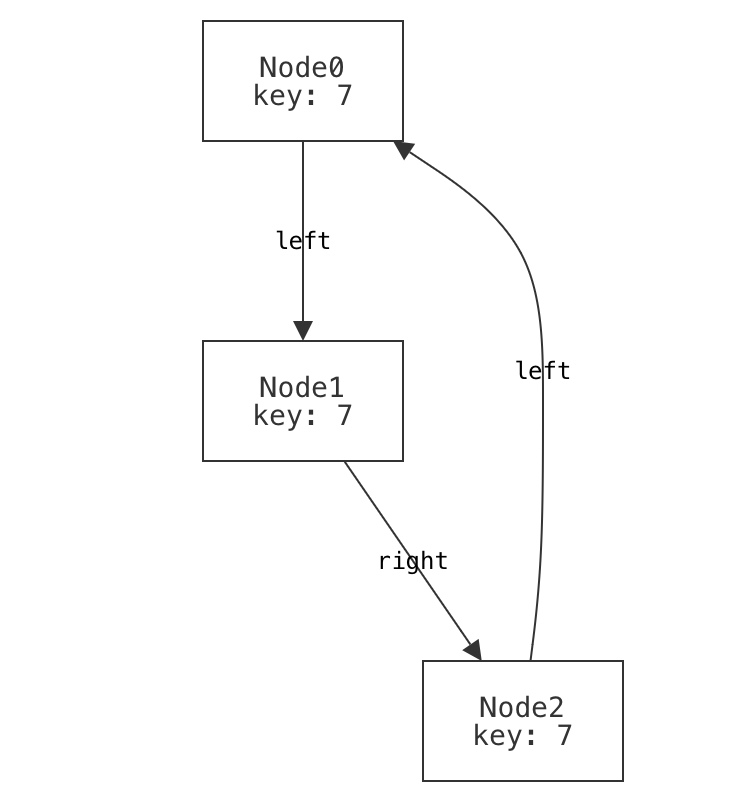

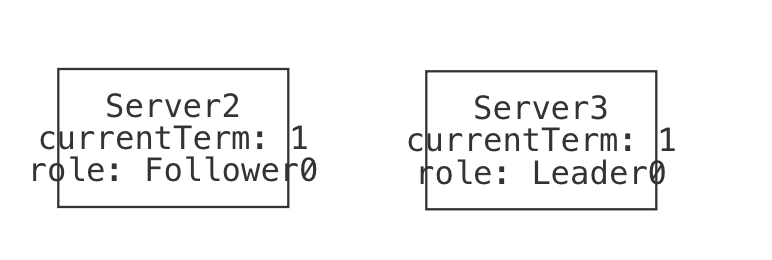

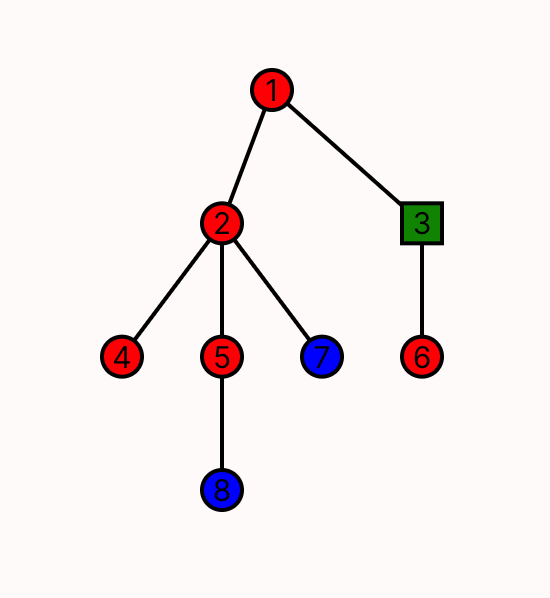

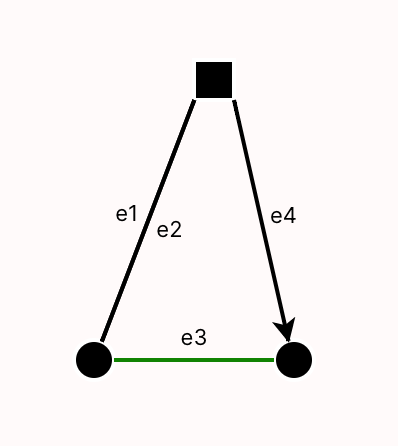

A pair of former CSCI 1710 students did an independent study project (ISP) on modeling crypto protocols, using the tools you'll learn in class. Here's an example picture, generated by their model, of the flaw in the Needham-Schroeder protocol:

You don't need to understand the specifics of the visualization; the point is that someone who has studied crypto protocols would. And this really does show the classic attack on Needham-Schroeder. You may not be a crypto-protocol person, but you probably are an expert in something subtle that you'd like to model, reason about, and understand better.

In fact, if you're reading this as part of your coursework for CSCI 1710, you will be expected to select, research, and model something yourself based on your interests. This is one of our main end-goals for the course.

Automated Reasoning as an Assistive Device

The human species has been so successful, in part, because of our ability to use assistive devices—tools! Eyeglasses, bicycles, hammers, bridges: all devices that assist us in navigating the world in our fragile meat-bodies. One of our oldest inventions, writing, is an assistive device that increases our long-term memory space and makes that memory persistent. Computers are only one in a long line of such inventions.

So, naturally, we've found ways to:

- use computers to help us test our ideas;

- use computers to exhaustively check program correctness;

- use computers to help us find gaps in our intuition about a program;

- use computers to help us explore the design space of a data structure, algorithm, card game, or chemical reaction;

- etc.

There's a large body of work in Computer Science that uses logic to do all those things. We tend to call it formal methods, especially when the focus is on reasoning about systems. It touches on topics like system modeling, constraint solving, program analysis, design exploration, and more. That's what this book is about: the foundational knowledge to engage with many different applications of these ideas, even if you don't end up working with them directly every day.

More concretely, we'll focus on a class of techniques called lightweight formal methods, which are characterized by tradeoffs that favor ease of use over strong guarantees (although we'll sometimes achieve those as well).

Jeannette Wing and Daniel Jackson wrote a short article coining the term "lightweight FM" in the 1990s, which you can find online.

When we say "systems" in this book we don't necessarily mean the kind of systems you see in a class on networks, hardware architecture, or operating systems. You can apply the techniques in this book to those subjects quite naturally, but you can also apply them to user interfaces, type systems in programming, hardware, version control systems like Git, web security, cryptographic protocols, robotics, puzzles, sports and games, and much more. So we construe the word "system" very broadly.

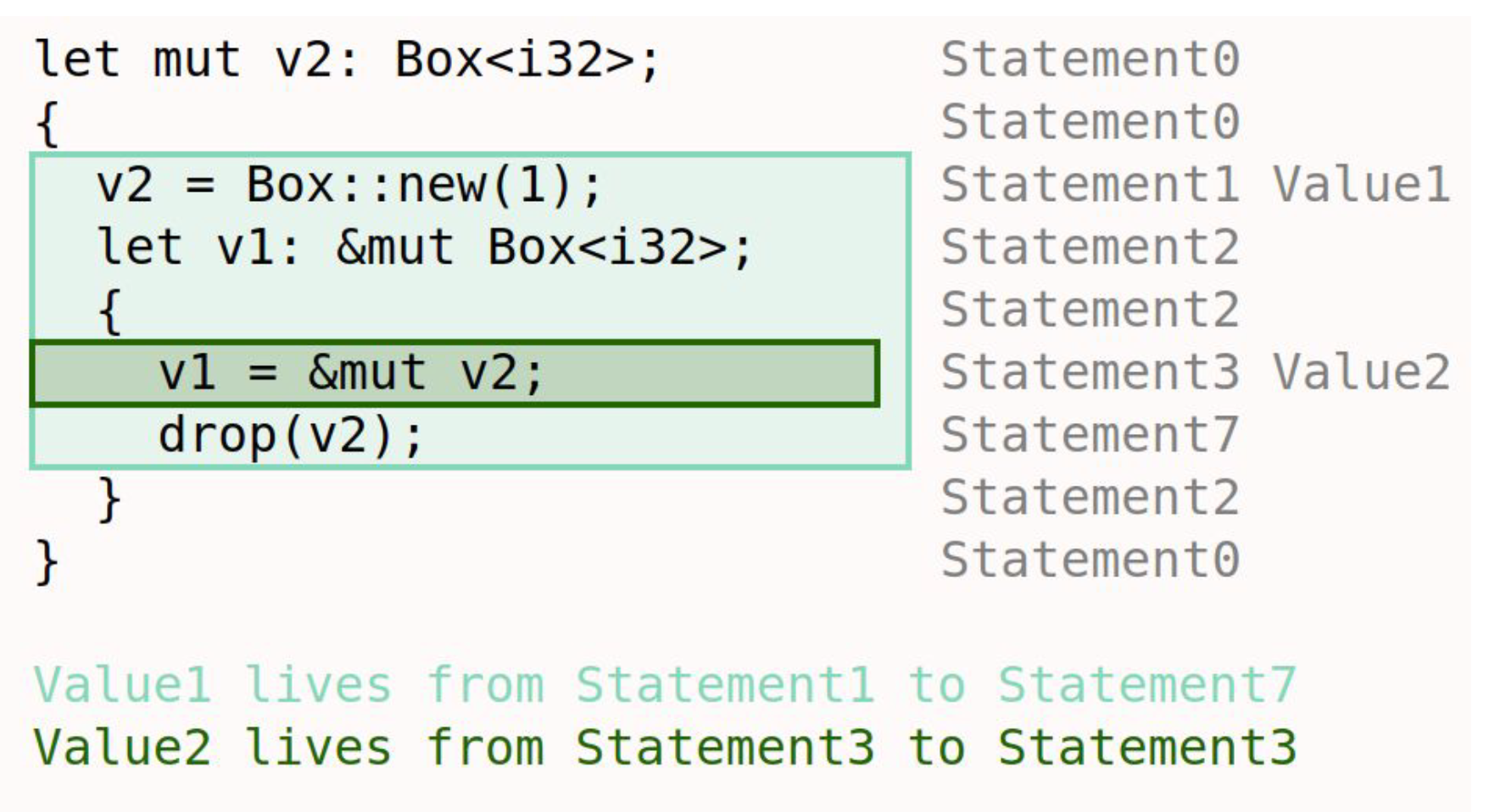

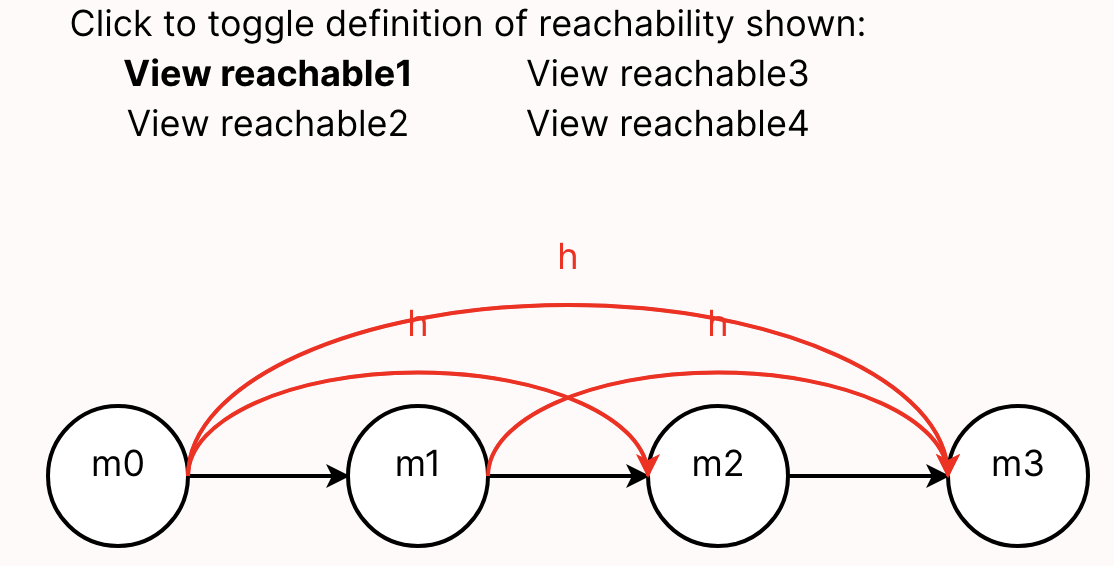

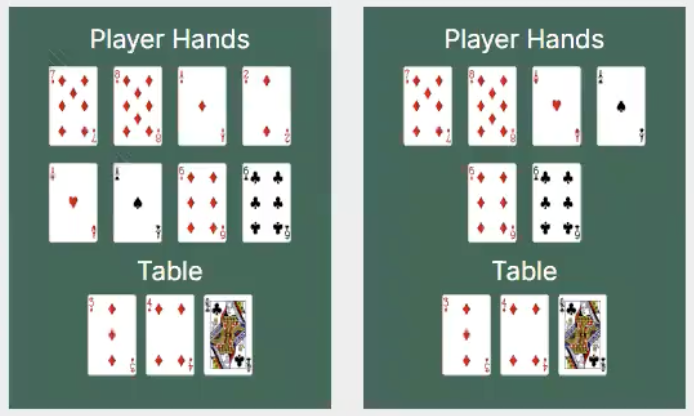

Here are some examples of "systems" that students have modeled in Forge: lifetimes in Rust, network reachability, and poker!

The Future of Computing

For better or worse, the shape of engineering is changing. Lots of people are excited, scared, or both about large language models like ChatGPT. This book won't teach you how to use generative AI, so it's reasonable to wonder: why learn from this book, instead of reading yet another book on another (deservedly) hot topic, like machine learning?

There are two questions that will never go out of style, and won't be answered by AI (at least, not in our lifetimes):

- What do you want to build? What does your customer really need? Answering this requires talking to them and other stakeholders, watching their processes, seeking their feedback, and adjusting your design based on it. And no matter who (or what) is writing the actual code, you need to be able to express all this precisely enough that they (or it) can succeed at the implementation.

- How will you evaluate what you get? No matter who (or what) is building the system, verification is needed before the system can be trusted.

Even setting aside the customer-facing aspects, we'll still need to think critically about what it is we want and how to evaluate whether we're getting it. The skills you learn here will remain useful (or become even more so) as engineering evolves. In the next chapter, we'll try to convince you that these skills will be useful for more than just code.

"Formal Methods"

Formal methods (FM) are ways to help you think carefully about a domain, process, or system. They use math-based techniques (which are usually implemented in tools) to help. They aren't an academic exercise; they are used widely in industry and have likely saved billions of dollars and thousands of lives.

Some industrial examples I'm fond of include:

- Amazon Web Services' Zelkova, which helps administrators author better security policies for their services. This book will give you the tools to build a policy-analysis system like Zelkova yourself.

- Microsoft's static driver verifier, which helps increase the reliability of low-level device drivers in Windows. While this book doesn't cover the techniques they used, I love to showcase this work, which helped Microsoft ship more stable drivers and is now decades old.

- MongoDB's work on modeling replication, which found a real bug in their code. Quoting the linked page: "We've never encountered this issue in testing or in the field and only found it by reasoning about the edge cases. This shows writing and model checking ... specs is an excellent alternative way to find and verify edge cases." (Ellipsis mine.) We won't use this exact tool, but we'll cover other model checkers in this book.

We can find real applications for FM outside Computer Science too, even in law. Here's an article about the value of modeling legal concepts to find loopholes in the law. This is the sort of FM we'll be learning how to do in 1710.

This GitHub repository keeps a (reasonably up to date, but not exhaustive!) list of other industrial applications of formal methods. Check it out!

Exercise

Can you think of one or two domains, systems, or processes that especially interest you? Think about the kinds of "system" you interact with regularly or have learned about in your life. What would you like to understand better about those systems?

Remember that we construe the word system broadly. A cryptographic protocol is a system, but so is the game of baseball. A data structure is a system, but so are chemical reactions.

Looking Ahead: Tools

The main tool we'll use in this book is Forge, a tool for modeling systems. In the course of the book, we'll be progressing through three language levels in Forge:

- Froglet, which restricts the set of operations so that we can jump right in more easily. If you have intuitions about object-oriented programming, those intuitions will be useful in Froglet, although there are a few important differences that we'll talk about.

- Relational Forge, which expands the set of operations available to include sets, relations, and relational operators. These are useful for reasoning about complex relationships between objects and for representing certain domains, like databases or graphs.

- Temporal Forge, which helps us cleanly model how a system's state evolves over time. Temporal Forge is based on the industry-standard specification language LTL—Linear Temporal Logic.

We'll also use some other tools, like:

- Hypothesis, a testing library for Python; and

- Z3, an SMT solver library.

From Tests to Properties

We'll talk about more than just software soon. For now, let's go back to testing. Most of us have learned how to write test cases. Given an input, here's the output to expect. Tests are a kind of pointwise specification; a partial one, and not great for fully describing what you want, but a kind of specification nonetheless. They're cheap, non-trivially useful, and better than nothing.

But they also carry our biases, they can't cover an infinite input space, etc. Even more, they're not always adequate carriers of intent: if I am writing a program to compute the statistical median of a dataset, and write assert median([1,2,3]) == 2, what exactly is the behavior of the system I'm trying to confirm? Surely I'm not writing the test because I care specifically about [1,2,3] only, and not about [3,4,5] in the same way? Maybe there was some broader aspect, some property of median I cared about when I wrote that test.

Exercise: What do you think it was? What makes an implementation of median correct?

Think, then click!

There might be many things! One particular idea is that, if the input list has odd length, the median needs to be an element of the list. Or that, once the set is sorted, the median should be the "middle" element.
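Here's a minimal sketch of turning those properties into a check, in plain Python with randomly generated inputs (a library such as Hypothesis automates and improves on this input generation). The implementation under test is the standard library's `statistics.median`; `bad_median`, which forgets to sort, is a hypothetical buggy version. The even-length property (the median lies between the two middle elements) is our own addition, since an even-length list has no single middle element.

```python
import random
from statistics import median


def median_ok(data: list, result) -> bool:
    """Check properties of a median without naming any specific expected value."""
    s = sorted(data)
    n = len(s)
    if n % 2 == 1:
        # Odd length: the median is an element of the list, the middle one once sorted.
        return result in data and result == s[n // 2]
    # Even length: the median lies between the two middle elements.
    return s[n // 2 - 1] <= result <= s[n // 2]


def find_counterexample(impl, trials: int = 1000, seed: int = 0):
    """Run impl on random non-empty lists; return the first input that breaks a property."""
    rng = random.Random(seed)
    for _ in range(trials):
        data = [rng.randint(-100, 100) for _ in range(rng.randint(1, 20))]
        if not median_ok(data, impl(data)):
            return data
    return None


def bad_median(data: list):
    # Hypothetical bug: takes the middle element without sorting first.
    return data[len(data) // 2]


print(find_counterexample(median))      # None
print(find_counterexample(bad_median))  # some list on which the bug shows
```

Notice that neither check ever states "the median of this list is that number"; the properties do all the work.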

There isn't always an easy-to-extract property for every unit test. But this idea, encoding goals instead of specific behaviors, forces us to start thinking critically about what exactly we want from a system and helps us to express it in a way that others (including, perhaps, LLMs) can better use. It's only a short hop from there to real applications like verifying firewalls or modeling the Java type system.

Sometimes the input space is small enough that exhaustive testing works well. This blog post, entitled "There are only four billion floats", is an example.
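The same idea works at any scale where the whole input space fits in a loop. As a small illustration of our own (not taken from that post), this Python sketch checks a classic bit trick for detecting powers of two against an obviously correct reference on every 16-bit unsigned integer, all 65,536 of them, instead of a handful of hand-picked cases.

```python
# Every power of two representable in 16 bits: 1, 2, 4, ..., 32768.
POWERS_OF_TWO = {1 << k for k in range(16)}


def is_power_of_two_fast(x: int) -> bool:
    # Bit trick: a power of two has exactly one set bit,
    # so clearing the lowest set bit (x & (x - 1)) leaves zero.
    return x != 0 and (x & (x - 1)) == 0


def is_power_of_two_reference(x: int) -> bool:
    # Obviously correct: look the value up in the full set.
    return x in POWERS_OF_TWO


# Exhaustive check over the entire 16-bit input space.
mismatches = [x for x in range(1 << 16)
              if is_power_of_two_fast(x) != is_power_of_two_reference(x)]
print(mismatches)  # []
```

Within its 16-bit domain this is a complete check rather than a sample, which is exactly what ordinary unit tests can't offer for large input spaces.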

Depending on your experience, this may also be a different kind of testing from what you're used to. Building a repertoire of different tools is essential for any engineer!

A New Kind of Testing

Cheapest Paths

Consider the problem of finding cheapest paths in a weighted graph. There are quite a few algorithms you might use: Dijkstra, Bellman-Ford, even a plain breadth-first search for an unweighted graph. You might have implemented one of these for another class!

The problem statement seems simple: take a graph and two vertex names, a source and a destination, as input. Produce the cheapest path from the source to the destination in the graph. But it turns out that this problem hides a lurking issue.

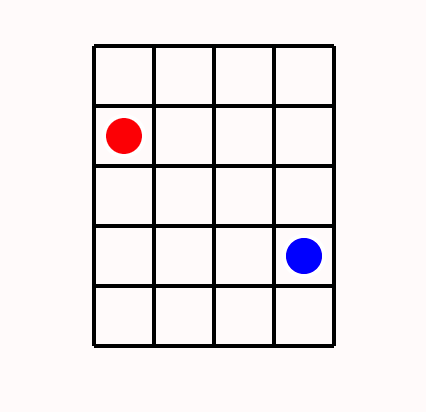

Exercise: Find the cheapest path from vertex G to vertex E in the graph pictured.

Think, then click!

The path is G to A to B to E. Great! We have the answer. Now we can go and add a test case for cheapest with that graph as input and (G, A, B, E) as the output.

Wait, you found a different path? G to D to B to E?

And another path? G to H to F to E?

If we add a traditional test case corresponding to one of the correct answers, our test suite will falsely raise alarms for correct implementations that happen to find different answers. In short, we'll be over-fitting our tests to one specific implementation: ours. But there's a fix. Maybe instead of writing:

cheapest(GRAPH, G, E) == [(G, A), (A, B), (B, E)]

we write:

cheapest(GRAPH, G, E) == [(G, A), (A, B), (B, E)] or

cheapest(GRAPH, G, E) == [(G, D), (D, B), (B, E)] or

cheapest(GRAPH, G, E) == [(G, H), (H, F), (F, E)]

Exercise: What's wrong with the "big or" strategy? Can you think of a graph where it'd be unwise to try to do this?

Think, then click!

There are at least two problems. First, we might have missed some possible solutions, which is quite easy to do; the first time Tim was preparing these notes, he missed the third path above! Second, there might be an unmanageable number of equally correct solutions. The most pathological case might be something like a graph with all possible edges present, all of which have weight zero. Then, every path is cheapest.

This problem (multiple correct answers) occurs in every part of Computer Science. Once you're looking for it, you can't stop seeing it. Most graph problems exhibit it. Worse, so do most optimization problems. Unique solutions are convenient, but the universe isn't built for our convenience.

Exercise: What's the solution? If test cases won't work, is there an alternative? (Hint: instead of defining correctness bottom-up, by small test cases, think top-down: can we say what it means for an implementation to be correct, at a high level?)

Think, then click!

In the cheapest-path case, we can notice that the costs of all cheapest paths are the same. This enables us to write:

cost(cheapest(GRAPH, G, E)) == 11

which is now robust against multiple implementations of cheapest.

This might be something you were taught to do when implementing cheapest-path algorithms, or it might be something you did on your own, unconsciously. (You might also have been told to ignore this problem, or not told about it at all...) We're not going to stop there, however.

Notice that we just did something subtle and interesting. Even if there are a billion cheapest paths between two vertices in the input graph, they all have the same, minimal cost. Our testing strategy has just evolved past naming specific values of output to checking broader properties of output.
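To see why the cost-based check is sturdier, here's a small Python sketch. The graph is hypothetical (it is not the one pictured earlier) and has two equally cheap paths from "s" to "t". The `cheapest` function is a textbook Dijkstra implementation that we assume returns a list of vertices; a different but equally correct implementation could break ties the other way, and only the cost-based assertion accepts both.

```python
import heapq

# Hypothetical graph: vertex -> {neighbor: edge weight}.
# Both s -> a -> t and s -> b -> t cost 4.
GRAPH = {
    "s": {"a": 1, "b": 2},
    "a": {"t": 3},
    "b": {"t": 2},
    "t": {},
}


def cheapest(graph: dict, src: str, dst: str) -> list:
    """Dijkstra's algorithm; assumes dst is reachable and weights are non-negative."""
    dist, prev = {src: 0}, {}
    frontier = [(0, src)]
    while frontier:
        d, u = heapq.heappop(frontier)
        if u == dst:
            break
        if d > dist[u]:
            continue  # stale queue entry
        for v, w in graph[u].items():
            if d + w < dist.get(v, float("inf")):
                dist[v], prev[v] = d + w, u
                heapq.heappush(frontier, (d + w, v))
    # Walk predecessor links back from dst to rebuild the path.
    path = [dst]
    while path[-1] != src:
        path.append(prev[path[-1]])
    return path[::-1]


def cost(graph: dict, path: list) -> int:
    """Total weight of the edges along a path given as a list of vertices."""
    return sum(graph[u][v] for u, v in zip(path, path[1:]))


path = cheapest(GRAPH, "s", "t")
# Brittle: asserting path == ["s", "a", "t"] would reject an equally correct
# implementation that breaks ties differently and returns ["s", "b", "t"].
# Robust: every cheapest path has the same cost.
assert cost(GRAPH, path) == 4
assert cost(GRAPH, ["s", "a", "t"]) == cost(GRAPH, ["s", "b", "t"]) == 4
```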

Similarly, we can move past specific inputs by generating them randomly. Then, write a function is_valid that takes an arbitrary (input, output) pair and returns true if and only if the output is a valid solution for the input. Generate a batch of inputs, run the implementation on each one, and use is_valid to check every resulting pair. You can apply this strategy to almost any problem, in any programming language. (For your homework this week, you'll be using Python.) Let's be more careful, though.
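Here's one way that recipe might look in Python, as a minimal sketch. The names `check`, `random_list`, `is_valid_sort`, and `dedup_sort` are our own, and sorting stands in for "almost any problem": a sorting output is valid if and only if it is in non-decreasing order and is a rearrangement of the input.

```python
import random
from collections import Counter


def check(generate, implementation, is_valid, trials: int = 500, seed: int = 0):
    """Feed random inputs to implementation; return the first (input, output) pair is_valid rejects."""
    rng = random.Random(seed)
    for _ in range(trials):
        inp = generate(rng)
        out = implementation(inp)
        if not is_valid(inp, out):
            return inp, out
    return None


def random_list(rng: random.Random) -> list:
    return [rng.randint(0, 9) for _ in range(rng.randint(0, 15))]


def is_valid_sort(inp: list, out: list) -> bool:
    in_order = all(a <= b for a, b in zip(out, out[1:]))
    same_elements = Counter(inp) == Counter(out)  # out is a permutation of inp
    return in_order and same_elements


def dedup_sort(xs: list) -> list:
    # Hypothetical buggy implementation: silently drops duplicates.
    return sorted(set(xs))


print(check(random_list, sorted, is_valid_sort))      # None
print(check(random_list, dedup_sort, is_valid_sort))  # a counterexample with a duplicate
```

The harness knows nothing about sorting; swapping in a different generator, implementation, and validity function reuses it for another problem.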

Exercise: Is there something else that cheapest needs to guarantee for that input, beyond finding a path with the same cost as our solution?

Think, then click!

We also need to confirm that the path returned by cheapest is indeed a path in the graph!

Exercise: Now take that list of goals, and see if you can outline a function that tests for it. Remember that the function should take the problem input (in this case, a graph and the source and destination vertices) and the output (in this case, a path). You might generate something like this pseudocode:

Think, then click!

```
isValid : input: (graph, vertex, vertex), output: list(vertex) -> bool
returns true IFF:
  (1) output.cost == trustedImplementation(input).cost
  (2) every vertex in output is in input's graph
  (3) every step in output is an edge in input
  ... and so on ...
```
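The pseudocode above can be turned into a runnable Python sketch. It rests on assumptions of our own. A graph is a dictionary mapping each vertex to a dictionary of neighbor-to-cost entries, and costs are non-negative integers. The "trusted implementation" is a small Dijkstra's algorithm under the hypothetical name trusted_cheapest_cost. None of these names or representations come from the text.

```python
import heapq
from typing import Dict, Hashable, List, Optional

# Assumed representation: graph[u][v] is the non-negative cost of the edge u -> v,
# and every vertex appears as a key (possibly mapping to an empty dict).
Graph = Dict[Hashable, Dict[Hashable, int]]


def trusted_cheapest_cost(graph: Graph, source: Hashable, dest: Hashable) -> Optional[int]:
    """Hypothetical 'trusted implementation': Dijkstra's algorithm, returning
    only the cost of a cheapest path (or None if dest is unreachable)."""
    best = {source: 0}
    frontier = [(0, 0, source)]  # (cost so far, tie-breaker, vertex)
    counter = 1  # tie-breaker so the heap never compares vertices directly
    while frontier:
        cost, _, u = heapq.heappop(frontier)
        if u == dest:
            return cost
        if cost > best.get(u, float("inf")):
            continue  # stale entry: a cheaper route to u was already found
        for v, w in graph.get(u, {}).items():
            if cost + w < best.get(v, float("inf")):
                best[v] = cost + w
                heapq.heappush(frontier, (cost + w, counter, v))
                counter += 1
    return None


def path_cost(graph: Graph, path: List[Hashable]) -> int:
    """Sum of the edge costs between consecutive vertices of path."""
    return sum(graph[u][v] for u, v in zip(path, path[1:]))


def is_valid(graph: Graph, source: Hashable, dest: Hashable, output: List[Hashable]) -> bool:
    """True iff output is a cheapest path from source to dest in graph."""
    # The path must start at source and end at dest.
    if not output or output[0] != source or output[-1] != dest:
        return False
    # (2) every vertex in output is in the graph
    if any(v not in graph for v in output):
        return False
    # (3) every step in output is an edge in the graph
    if any(v not in graph[u] for u, v in zip(output, output[1:])):
        return False
    # (1) output's cost matches the cost found by the trusted implementation
    return path_cost(graph, output) == trusted_cheapest_cost(graph, source, dest)


if __name__ == "__main__":
    g = {"A": {"B": 1, "C": 4}, "B": {"C": 1}, "C": {}}
    print(is_valid(g, "A", "C", ["A", "B", "C"]))  # True: cost 2 is the cheapest
    print(is_valid(g, "A", "C", ["A", "C"]))       # False: cost 4 is not
```

Note that is_valid accepts any cheapest path. Two implementations that break ties differently will both pass, which is exactly the robustness that exact-output test cases lacked.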

This style of testing is called Property-Based Testing (PBT). When we're using a trusted implementation—or some other artifact—to either evaluate the output or to help generate useful inputs, it is also a variety of Model-Based Testing (MBT).

There are a lot of techniques under the umbrella of MBT. A model can be another program, a formal specification, or some other type of artifact that we can "run". Often, MBT is used in a more stateful way: to generate sequences of user interactions that drive the system into interesting states.

For now, know that modeling systems can be helpful in generating good tests, in addition to everything else.

There are a few questions, though...

Question: Can we really trust a "trusted" implementation?

No, not completely. It's impossible to reach a hundred percent trust; anybody who tells you otherwise is selling something. Even if you spend years creating a correct-by-construction system, there could be a bug in (say) how it is deployed or connected to other systems.

But often, questions of correctness are really about the transfer of confidence: my old, slow implementation has worked for a couple of years now, and it's probably mostly right. I don't trust my new, optimized implementation at all: maybe it uses an obscure data structure, or a language I'm not familiar with, or maybe I don't even have access to the source code at all.

And anyway, often we don't need recourse to any trusted model; we can just phrase the properties directly.

Exercise: What if we don't have a trusted implementation?

Think, then click!

You can use this approach whenever you can write a function that checks the correctness of a given output. It doesn't need to use an existing implementation (it's just easier to talk about that way). In the next example we won't use a trusted implementation at all!

Input Generation

Now you might wonder: Where do the inputs come from?

Great question! Some we will manually create based on our own cleverness and understanding of the problem. Others, we'll generate randomly.

Random inputs are used for many purposes in software engineering: "fuzz testing", for instance, creates vast quantities of random inputs in an attempt to find crashes and other serious errors. We'll use that same idea here, except that our notion of correctness is usually a bit more nuanced.

Concretely:

It's important to note that some creativity is still involved here: you need to come up with an is_valid function (the "property"), and you'll almost always want to create some hand-crafted inputs (don't trust a random generator to find the subtle corner cases you already know about!) The strength of this approach lies in its resilience against problems with multiple correct answers, and in its ability to mine for bugs while you sleep. Did your random testing find a bug? Fix it, and then add that input to your list of regression tests. Rinse, repeat.

If we were still thinking in terms of traditional test cases, this would make no sense: where would the outputs come from? Instead, we've created a testing system where concrete outputs aren't something we need to provide. We just check whether the program under test produces a valid output.
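The overall loop can be sketched in plain Python without a PBT library: generate random inputs, run the implementation under test, check each input-output pair against the property, and keep any failures around as regression inputs. Everything named here is hypothetical and for illustration only. check_property is not part of Hypothesis or any other tool. buggy_max is a deliberately broken implementation under test, chosen because its property is easy to state.

```python
import random
from typing import Callable, List, Optional, TypeVar

In = TypeVar("In")
Out = TypeVar("Out")


def check_property(
    generate: Callable[[random.Random], In],
    implementation: Callable[[In], Out],
    is_valid: Callable[[In, Out], bool],
    trials: int = 1000,
    seed: int = 0,
    regressions: Optional[List[In]] = None,
) -> Optional[In]:
    """Return the first input whose output fails is_valid, or None if all pass.
    Hand-crafted regression inputs are checked before the random ones."""
    rng = random.Random(seed)  # fixed seed, so a failure can be reproduced
    inputs = list(regressions or []) + [generate(rng) for _ in range(trials)]
    for inp in inputs:
        if not is_valid(inp, implementation(inp)):
            return inp
    return None


def buggy_max(xs: List[int]) -> int:
    """Deliberately buggy implementation under test: ignores the last element."""
    return max(xs[:-1]) if len(xs) > 1 else xs[0]


def max_is_valid(xs: List[int], out: int) -> bool:
    """The property: out is an element of xs, and no element is larger."""
    return out in xs and all(x <= out for x in xs)


def random_int_list(rng: random.Random) -> List[int]:
    """Generator: a non-empty list of small random integers."""
    return [rng.randint(-100, 100) for _ in range(rng.randint(1, 10))]


if __name__ == "__main__":
    # A list whose last element is strictly its largest:
    print(check_property(random_int_list, buggy_max, max_is_valid))
    # None: the built-in max satisfies the property on every input tried
    print(check_property(random_int_list, max, max_is_valid))
```

Fixing the seed makes a failure reproducible. Real PBT libraries such as Hypothesis do much more, for example shrinking a failing input down to a smaller one.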

The Hypothesis Library

There are PBT libraries for almost every popular language. In this book, we'll be using a library for Python called Hypothesis. Hypothesis has many helper functions to make generating random inputs relatively easy. It's worth spending a little time stepping through the library. Let's test a function in Python itself: the median function in the statistics library, which we began this chapter with. What are some important properties of median?

If you're in CSCI 1710, your first homework starts by asking you to generate code using an LLM of your choice, such as ChatGPT. Then, you'll use property-based testing to assess its correctness. To be clear, you will not be graded on the correctness of the code you prompt an LLM to generate. Rather, you will be graded on how good your property-based testing is.

Later in the semester, you'll be using PBT again to test more complex software!

Now let's use Hypothesis to test at least one of those properties. We'll start with this template:

```python
from hypothesis import given, settings
from hypothesis.strategies import integers, lists
from statistics import median

# Tell Hypothesis: inputs for the following function are non-empty lists of integers
@given(lists(integers(), min_size=1))
# Tell Hypothesis: run up to 500 random inputs
@settings(max_examples=500)
def test_python_median(input_list):
    pass

# Because of how Python's imports work, this if statement is needed to prevent
# the test function from running whenever a module imports this one. This is a
# common feature in Python modules that are meant to be run as scripts.
if __name__ == "__main__":  # ...if this is the main module, then...
    test_python_median()
```

Let's start by filling in the shape of the property-based test case:

```python
def test_python_median(input_list):
    output_median = median(input_list)  # call the implementation under test
    print(f'{input_list} -> {output_median}')  # for debugging our property function
    if len(input_list) % 2 == 1:
        assert output_median in input_list
    # The above checks a conditional property. But what if the list length is even?
    # We should be able to do better!
```

Exercise: Take a moment to try to express what it means for median to be correct in the language of your choice. Then continue on with reading this section.

Expressing properties can often be challenging. After some back and forth, we might reach a candidate function like this:

```python
def test_python_median(input_list):
    output_median = median(input_list)
    print(f'{input_list} -> {output_median}')
    if len(input_list) % 2 == 1:
        assert output_median in input_list

    lower_or_eq = [val for val in input_list if val <= output_median]
    higher_or_eq = [val for val in input_list if val >= output_median]
    assert len(lower_or_eq) >= len(input_list) // 2  # int division, drops decimal part
    assert len(higher_or_eq) >= len(input_list) // 2  # int division, drops decimal part
```

Unfortunately, there's a problem with this solution. Python's median implementation fails this test! Hypothesis provides a random input on which the function fails: input_list=[9502318016360823, 9502318016360823]. Give it a try! This is what my computer produced; what happens on yours?

Exercise: What do you think is going wrong?

Think, then click!

Here's what my Python console reports:

```python
>>> statistics.median([9502318016360823, 9502318016360823])
9502318016360824.0
```

I really don't like seeing a number that's larger than both numbers in the input set. But I'm also suspicious of that trailing .0. median has returned a float, not an int. That might matter. But first, we'll try the computation that we might expect median to run:

```python
>>> (9502318016360823*2)/2
9502318016360824.0
```

What if we force Python to perform integer division?

```python
>>> (9502318016360823*2)//2
9502318016360823
```

Could this be a floating-point imprecision problem? Let's see if Hypothesis can find another failing input where the values are smaller. We'll change the generator to produce only small numbers, and increase the number of trials hundredfold:

```python
@given(lists(integers(min_value=-1000, max_value=1000), min_size=1))
@settings(max_examples=50000)
```

No error manifests. That doesn't mean one couldn't, but it sure looks like large numbers make the chance of an error much higher.

The issue is: because Python's statistics.median returns a float, we've inadvertently been testing the accuracy of Python's primitive floating-point division, and floating-point division is known to be imprecise in some cases. It might even manifest differently on different hardware—this is only what happens on my laptop!
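Here is one way to see what's happening. On essentially every platform Python runs on, a float is an IEEE 754 double-precision number with a 53-bit significand, so not every integer larger than 2**53 can be represented exactly. The failing value is above that threshold, so the true median, 9502318016360823, gets rounded to a neighboring float. The sketch below checks this directly. exactly_representable is a hypothetical helper, not part of the statistics library.

```python
from statistics import median

BIG = 9502318016360823  # the failing value Hypothesis reported above


def exactly_representable(n: int) -> bool:
    """True iff converting the integer n to a float loses no information."""
    return int(float(n)) == n


print(BIG > 2**53)                       # True
print(exactly_representable(BIG))        # False
print(float(BIG))                        # 9502318016360824.0
print(median([BIG, BIG]))                # 9502318016360824.0
print(exactly_representable(2**53))      # True
print(exactly_representable(2**53 + 1))  # False
```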

Anyway, we have two or three potential fixes:

- bound the range of potential input values when we test;

- check equality within some small amount of error you're willing to tolerate (a common trick when writing tests about float values); or

- change libraries to one that uses an arbitrary-precision number representation, like BigNumber. We could adapt our test fairly easily to that setting, and we'd expect this problem to not occur.

Which is best? I don't really like the idea of arbitrarily limiting the range of input values here, because picking a range would require me to understand the floating-point arithmetic specification a lot more than I do. For instance, how do I know that there exists some number before which this issue can't manifest? How do I know that all processor architectures would produce the same thing?

Between the other two options (adding an error term and changing libraries) it depends on the engineering context we're working in. Changing libraries may have consequences for performance or system design. Testing equality within some small window may be the best option in this case, where we know that many inputs will involve float division.
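As a sketch of the "small window" option, here is the earlier property rewritten to compare values against the computed median with a tolerance. It uses math.isclose, which compares its arguments as floats. The tolerance rel_tol=1e-9 is an arbitrary assumption you would tune for your own context, and median_property_holds is a hypothetical name. To use it with Hypothesis, you would assert its result inside test_python_median.

```python
import math
from statistics import median
from typing import List


def median_property_holds(input_list: List[int], rel_tol: float = 1e-9) -> bool:
    """The median property from above, but values within rel_tol of the
    (float) median count as equal to it. rel_tol is an assumed, tunable value."""
    output_median = median(input_list)

    def close(val: int) -> bool:
        return math.isclose(val, output_median, rel_tol=rel_tol)

    # Odd-length lists: the median must be one of the elements.
    if len(input_list) % 2 == 1 and output_median not in input_list:
        return False
    lower_or_eq = [v for v in input_list if v <= output_median or close(v)]
    higher_or_eq = [v for v in input_list if v >= output_median or close(v)]
    half = len(input_list) // 2
    return len(lower_or_eq) >= half and len(higher_or_eq) >= half


print(median_property_holds([9502318016360823, 9502318016360823]))  # True
```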

Takeaways

We'll close this section by noticing two things:

First, being precise about what correctness means is powerful. With ordinary unit tests, we're able to think about behavior only point-wise. Here, we need to broadly describe our goals, and there's a cost to that, but also advantages: comprehensibility, more powerful testing, better coverage, etc. And we can still get value from a partial definition, because we can then at least apply PBT to that portion of the program's behavior.

Second, the very act of trying to precisely express, and test, correctness for median taught us (or reminded us about) something subtle about how our programming language works, which tightened our definition of correctness. Modeling often leads to such a virtuous cycle.

Intro to Modeling Systems (Part 1: Tic-Tac-Toe)

What's a Model?

A model is a representation of a system that faithfully includes some but not all of the system's complexity. There are many different ways to model a system, all of which have different advantages and disadvantages. Think about what a car company does before it produces a new car design. Among other things, it creates multiple models. E.g.,

- it models the car in some computer-aided design tool; and then

- creates a physical model of the car, perhaps with clay, for testing in wind tunnels etc.

There may be many different models of a system, all of them focused on something different. As the statisticians say, "all models are wrong, but some models are useful". Learning how to model a system is a key skill for engineers, not just within "formal methods". Abstraction is one of the key tools in Computer Science, and modeling lies at the heart of abstraction.

In this course, the models we build aren't inert; we have tools that we can use to explore and analyze them!

Don't Be Afraid of Imperfect Representations

We don't need to fully model a system to be able to make useful inferences. We can simplify, omit, and abstract concepts/attributes to make models that approximate the system while preserving the fundamentals that we're interested in.

Exercise: If you've studied physics, there's a great example of this in statics and dynamics. Suppose I drop a coin from the top of the science library, and ask you what its velocity will be when it hits the ground. Using the methods you learn in beginning physics, what's something you can usefully disregard?

Think, then click!

Air resistance! Friction! We can still get a reasonable approximation for many problems without needing to include that. (And advanced physics adds even more factors that aren't worth considering at this scale.) The model without friction is often enough.

What is a "System"? (Models vs. Implementations)

When we say "systems" in this book, we mean the term broadly. A distributed system (like replication in MongoDB) is a system, but so are user interfaces and hardware devices like CPUs and insulin pumps. Git is a system for version control. The web stack, cryptographic protocols, chemical reactions, the rules of sports and games—these are all systems too!

To help build intuition, let's work with a simple system: the game of tic-tac-toe (also called noughts and crosses). There are many implementations of this game, including this one that I wrote in Python. And, of course, these implementations often have corresponding test suites, like this (incomplete) example.

Exercise: Play a quick game of tic-tac-toe by hand. If you can, find a partner, but if not, then play by yourself.

Notice what just happened. You played the game. In doing so, you ran your own mental implementation of the rules. The result you got was one of many possible games, each with its own specific sequence of legal moves, leading to a particular ending state. Maybe someone won, or maybe the game was a tie. Either way, many different games could have ended with that same board.

Modeling is different from programming. When you're programming traditionally, you give the computer a set of instructions and it follows them. This is true whether you're programming functionally or imperatively, with or without objects, etc. Declarative modeling languages like Forge work differently. The goal of a model isn't to run instructions, but rather to describe the rules that govern systems.

Here's a useful comparison to help reinforce the difference (with thanks to Daniel Jackson):

- An empty program does nothing.

- An empty model allows every behavior.

Modeling Tic-Tac-Toe Boards

What are the essential concepts in a game of tic-tac-toe?

When we're first writing a model, we'll start with 5 steps. For each step, I'll give examples from tic-tac-toe and also for binary search trees (which we'll start modeling soon) for contrast.

- What are the datatypes involved, and their fields?
  - For tic-tac-toe: they might be the 3-by-3 board and the X and O marks that go in board locations.
  - For a binary search tree: they might be the tree nodes and their left and right children.

- What makes an instance of these datatypes well formed? That is, what conditions are needed for them to not be garbage?
  - For tic-tac-toe, we might require that the indexes used are between 0 and 2, since the board is 3-by-3. (We could just as easily use 1, 2, and 3. I picked 0 as the starting point out of habit, because list indexes start from 0 in the programming languages I tend to use.)
  - For a binary search tree, we might require that every node has at most one left child, at most one right child, a unique parent, and so on.

- What's a small example of how these datatypes can be instantiated?
  - For tic-tac-toe, the empty board would be an example. So would the board where X moves first into the middle square.
  - For a binary search tree, this might be a tree with only one node, or a 3-node tree where the root's left and right children are leaves.

- What does the model look like when run?
  - For tic-tac-toe, we should see a board with some number of X and O marks.
  - For a binary search tree, we should see some set of nodes that forms a single tree via left- and right-children.

- What domain predicates are there? Well-formedness defines conditions that are needed for an instantiation to not be "garbage". But whatever we're modeling surely has domain-specific concepts of its own, which may or may not hold.
  - For tic-tac-toe, we care a great deal if the board is a winning board or not. Similarly, we might care if it looks like someone has cheated.
  - For a binary search tree, we care if the tree is balanced, or if it satisfies the BST invariant.

Why make this distinction between well-formedness and domain predicates? Because one should always hold in any instance Forge considers, but the other may or may not hold. In fact, we might want to use Forge to verify that a domain predicate always holds! And if we've told Forge that any instance that doesn't satisfy it is garbage, Forge won't find us such an instance.

Datatypes

We might list:

- the players X and O;

- the 3-by-3 game board, where players can put their marks;

- the idea of whose turn it is at any given time; and

- the idea of who has won the game at any given time.

Now let's add those ideas to a model in Forge!

```forge
#lang forge/froglet
```

The first line of any Forge model will be a #lang line, which says which Forge language the file uses. We'll start with the Froglet language for now. Everything you learn in this language will apply in other Forge languages, so I'll use "Forge" interchangeably.

Now we need a way to talk about the noughts and crosses themselves. So let's add a sig that represents them:

```forge
#lang forge/froglet

abstract sig Player {}
one sig X, O extends Player {}
```

You can think of sig in Forge as declaring a kind of object. A sig can extend another, in which case we say that it is a child of its parent, and child sigs cannot overlap. When a sig is abstract, any member must also be a member of one of that sig's children; in this case, any Player must either be X or O. Finally, a one sig has exactly one member—there's only a single X and O in our model.

We also need a way to represent the game board. We have a few options here: we could create an Index sig, and encode an ordering on those (something like "column A, then column B, then column C"). Another is to use Forge's integer support. Both solutions have their pros and cons. Let's use integers, in part to get some practice with them.

```forge
#lang forge/froglet

abstract sig Player {}
one sig X, O extends Player {}

sig Board {
    board: pfunc Int -> Int -> Player
}
```

Every Board object contains a board field describing the moves made so far. For each Board, this field is a partial function (something like a dictionary) that maps each (Int, Int) pair to at most one Player.
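A Python dictionary keyed by (row, col) tuples behaves much like this partial function: each key maps to at most one value, and an empty square is simply a missing key. This is only an analogy, under our own assumption that players are the strings "X" and "O". mark_at is a hypothetical helper, and none of this is meant to describe how Forge works internally.

```python
from typing import Dict, Optional, Tuple

# Analogy only: players are represented here as the strings "X" and "O".
Board = Dict[Tuple[int, int], str]


def mark_at(board: Board, row: int, col: int) -> Optional[str]:
    """Like b.board[row][col] in Forge: the player at (row, col), or None if empty.
    A dict key maps to at most one value, just as each (Int, Int) pair maps
    to at most one Player."""
    return board.get((row, col))


example_board: Board = {(0, 0): "X", (1, 1): "O"}
print(mark_at(example_board, 0, 0))  # X
print(mark_at(example_board, 2, 2))  # None
```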

Well-formedness

These definitions sketch the overall shape of a board: players, marks on the board, and so on. But not all boards that fit the definition will be valid. For example:

- Forge integers aren't true mathematical integers, but are bounded by a bitwidth we give whenever we run the tool. So we need to be careful here. We want a classical 3-by-3 board with indexes of (say) 0, 1, and 2, not a board where (e.g.) row -5, column -1 is a valid location.

We'll call these well-formedness constraints. They aren't innately enforced by our sig declarations, but we'll almost always want Forge to enforce them, so that it doesn't find "garbage instances". Let's write a wellformedness predicate:

```forge
-- a Board is well-formed if and only if:
pred wellformed[b: Board] {
    -- row and column numbers used are between 0 and 2, inclusive
    all row, col: Int | {
        (row < 0 or row > 2 or col < 0 or col > 2)
            implies no b.board[row][col]
    }
}
```

Forge treats either -- or // as beginning a line-level comment, and /* ... */ as denoting a block comment. This is different from the Python code we saw in the last section! In Forge, # has a different meaning.

This predicate is true of any Board if and only if the above constraint is satisfied. Let's break down the syntax:

- Constraints can quantify over a domain. E.g., all row, col: Int | ... says that for any pair of integers (up to the given bitwidth), the following condition (...) must hold. Forge also supports, e.g., existential quantification (some), but we don't need that yet. We also have access to standard boolean operators like or, implies, etc.

- Formulas in Forge always evaluate to a boolean; expressions evaluate to sets. For example,
  - the expression b.board[row][col] evaluates to the Player (if any) with a mark at location (row, col) in board b; but
  - the formula no b.board[row][col] is true if and only if there is no such Player.

- A pred (predicate) in Forge is a helper function that evaluates to a boolean. Thus, its body should always be a formula.

Notice that, rather than describing a process that produces a well-formed board, or even instructions to check well-formedness, we've just given a declarative description of what's necessary and sufficient for a board to be well-formed. If we'd left the predicate body empty, any board would be considered well-formed—there'd be no formulas to enforce!

A Few Examples

Since a predicate is just a function that returns true or false, depending on its arguments and whichever instance Forge is looking at, we can write tests for it the same way we would for any other boolean-valued function. But even if we're not testing, it can be useful to write a small number of examples, so we can build intuition for what the predicate means.

In Forge, examples are automatically run whenever your model executes. They describe basic intent about a given predicate; in this case, let's write two examples in Forge:

- A board where X has moved 3 times in valid locations, and so ought to be considered well formed.

- A board where a player has moved in an invalid location, and shouldn't be considered well formed.

Notice that we're not making judgements about the rules being obeyed yet—just about whether our wellformed predicate is behaving the way we expect. And the wellformed predicate isn't aware of things like "taking turns" or "stop after someone has won", etc. It just knows about the valid indexes being 0, 1, and 2.

We'll write those two examples in Forge:

```forge
-- Helper to make these examples easier to write
pred all_wellformed { all b: Board | wellformed[b]}

-- all_wellformed should be _true_ for the following instance
example firstRowX_wellformed is {all_wellformed} for {
    Board = `Board0                 -- backquote labels specific atoms
    X = `X  O = `O                  -- examples must define all sigs
    Player = X + O                  -- only two kinds of player
    `Board0.board = (0, 0) -> `X +  -- the partial function for the board's
                    (0, 1) -> `X +  -- contents (unmentioned squares must
                    (0, 2) -> `X    -- remain empty, because we used "=" to say
                                    -- "here's the function for `Board0")
}

-- all_wellformed should be _false_ for the following instance
example off_board_not_wellformed is {not all_wellformed} for {
    Board = `Board0
    X = `X  O = `O
    Player = X + O
    `Board0.board = (-1, 0) -> `X +
                    (0, 1) -> `X +
                    (0, 2) -> `X
}
```

Notice that we've got one test that's a positive example and another that's a negative example. We want to make sure to exercise both cases, or else a predicate that is "always true" or "always false" could pass our suite.
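If quantifiers are new to you, it may help to see the wellformed predicate and the two examples above mirrored in plain Python. This sketch rests on our own assumptions: boards are dicts from (row, col) to a player name, and Int is Forge's default 4-bit range (-8 through 7). As with Forge's all, the loop covers every available integer pair, not just the valid indexes. The implies helper spells out the rule that "a implies b" means "(not a) or b".

```python
from typing import Dict, Tuple

# Assumed representation: a dict from (row, col) to a player name ("X" or "O").
Board = Dict[Tuple[int, int], str]

BITWIDTH = 4  # assumed to match Forge's default integer bitwidth
INTS = range(-(2 ** (BITWIDTH - 1)), 2 ** (BITWIDTH - 1))  # -8 through 7


def implies(a: bool, b: bool) -> bool:
    return (not a) or b


def wellformed(b: Board) -> bool:
    """Analog of the Forge predicate: for every (row, col) pair of available
    integers, an out-of-range index implies there is no mark there.
    (Unlike Forge, a Python dict could hold keys outside INTS; this loop
    would not see them.)"""
    return all(
        implies(row < 0 or row > 2 or col < 0 or col > 2, (row, col) not in b)
        for row in INTS
        for col in INTS
    )


# The two examples, replayed as assertions: one positive, one negative.
first_row_x = {(0, 0): "X", (0, 1): "X", (0, 2): "X"}
off_board = {(-1, 0): "X", (0, 1): "X", (0, 2): "X"}
assert wellformed(first_row_x)
assert not wellformed(off_board)
```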

Running Forge

The run command tells Forge to search for an instance satisfying the given constraints:

```forge
run { some b: Board | wellformed[b]}
```

(If you're curious about how Forge finds solutions, you can find a brief sketch in the Q&A for this chapter.)

When we click the play button in the VSCode extension, the engine solves the constraints and produces a satisfying instance. (Because of differences across solver versions, hardware, etc., it's possible you'll see a different instance than the one shown here.) A browser window should pop up with a visualization. You can also run racket <filename.frg> in the terminal, although we recommend the VSCode extension.

If you're running on Windows, the Windows-native cmd and PowerShell terminals will not properly load Forge's visualizer. Instead, we suggest using one of many other options on Windows that we've tested and know to work: the VSCode extension (available on the VSCode Marketplace), DrRacket, Git for Windows (e.g., git bash), Windows Subsystem for Linux, or Cygwin.

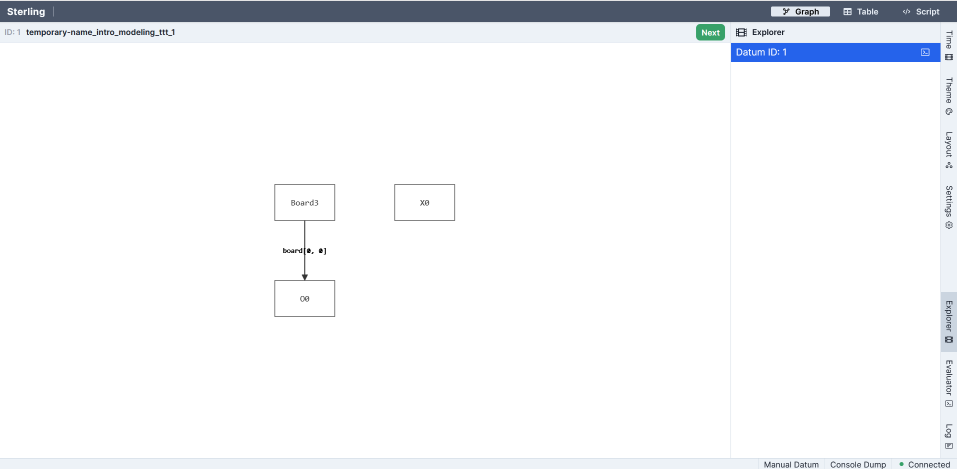

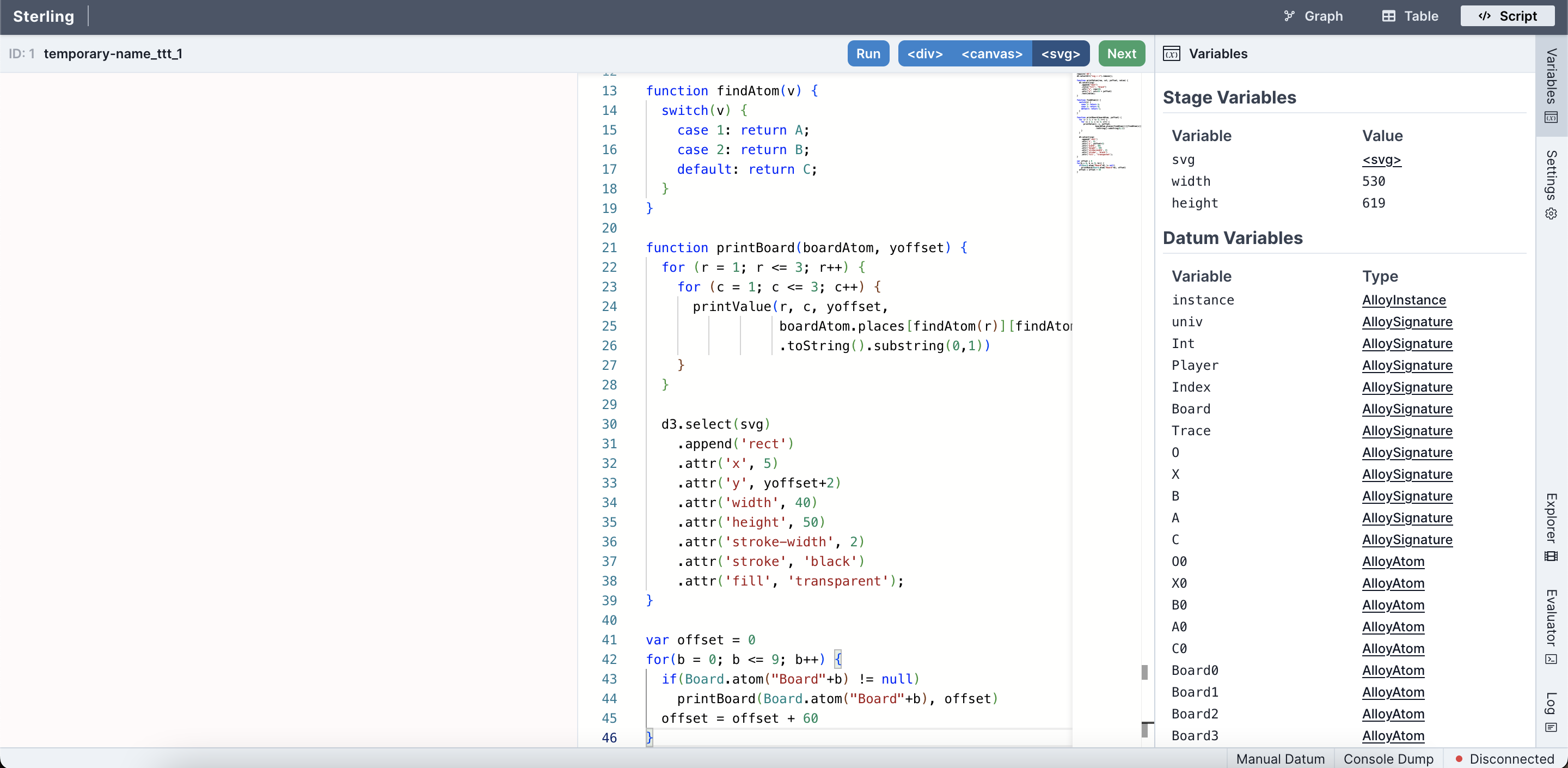

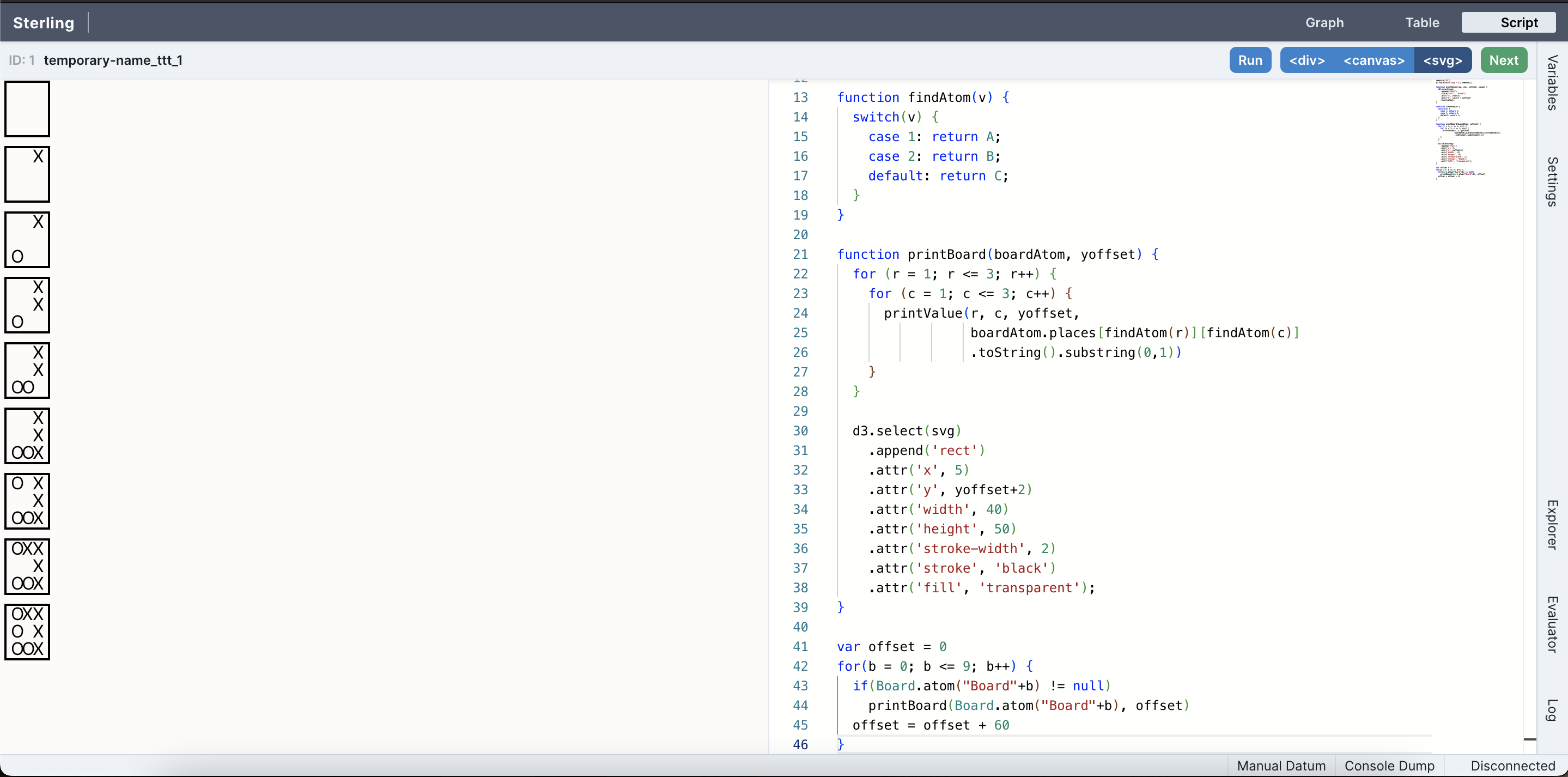

There are many options for visualization. The default, which loads initially, is a directed-graph-based one:


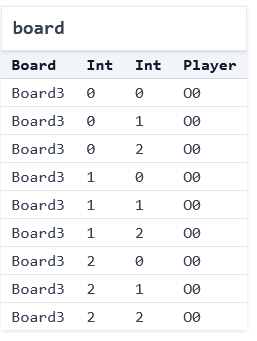

This isn't very useful; it looks nothing like a tic-tac-toe board! We can make more progress by using the "Table" visualization—which isn't ideal either:

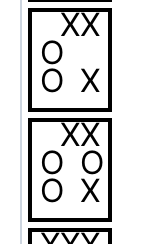

Forge also allows users to make custom visualizations via short JavaScript programs; here's an example basic visualizer for this specific tic-tac-toe model that produces images like this one:

We'll talk more about visualization scripts later. For now, let's proceed.

This instance contains a single board, and it has 9 entries. Player O has moved in all of them (the 0 suffix of O0 in the display is an artifact of how Forge's engine works; ignore it for now). It's worth noticing two things:

- This board doesn't look quite right: player O occupies all the squares. We might ask: has player O been cheating? But the fact is that this board satisfies the constraints we have written so far. Forge produces it simply because our model isn't yet restrictive enough, and for no other reason. "Cheating" doesn't exist yet.

- We didn't say how to find that instance. We just said what we wanted, and the tool performed some kind of search to find it. So far the objects are simple, and the constraints basic, but hopefully the power of the idea is coming into focus.

Here, we see Board3 because the solver had a few options to pick from: we never said there should only ever be one Board, after all. So, under the hood, it was considering the potential existence of multiple boards. And then it happened to pick this one to exist in this instance.

Reflection: Implementation vs. Model

So far we've just modeled boards, not full games. But we can still contrast our work here against the Python implementation of tic-tac-toe shared above.

Exercise: How do the data-structure choices, and type declarations, in the implementation compare with the essence of the game as reflected in the model? What is shared, and what is different?

Spend a minute identifying at least one commonality and at least one difference, then move on.

Domain Predicates

Now let's write predicates that describe important ideas in the domain. What's important in the game of tic-tac-toe? Here are a few things.

Starting Boards

What would it mean to be a starting state in a game? The board is empty:

```forge
pred starting[s: Board] {
    all row, col: Int |
        no s.board[row][col]
}
```

Turns

How do we tell when it's a given player's turn? It's X's turn when there are the same number of each mark on the board:

```forge
pred XTurn[s: Board] {
    #{row, col: Int | s.board[row][col] = X} =
    #{row, col: Int | s.board[row][col] = O}
}
```

Here, we're measuring the size of 2 sets. The {row, col: Int | ...} syntax is called a set comprehension. A set comprehension defines a set. Here we define two: the set of row-column pairs where the board contains X's mark, and the set where it contains O's mark. The # operator gives the size of these sets, which we then compare.

Exercise: Is it enough to say that OTurn is the negation of XTurn? That is, we could write: pred OTurn[s: Board] { not XTurn[s]}. This seems reasonable enough; why might we not want to write this?

Think, then click!

Because we defined X's turn to be when the numbers of X's and O's on the board are in balance, any other board would be O's turn, including ones that ought to be illegal once we start defining moves of the game. Instead, let's say something like this:

```forge
pred OTurn[s: Board] {
    -- It's O's turn if X has moved once more often than O has
    #{row, col: Int | s.board[row][col] = X} =
    add[#{row, col: Int | s.board[row][col] = O}, 1]
}
```
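Here's a Python analog of these two predicates, written under our own assumption that a board is a dict from (row, col) to "X" or "O". The set comprehensions mirror Forge's {row, col: Int | ...}, and len plays the role of #. The board named cheating shows why OTurn needs its own definition. X has moved twice and O never has, so the board satisfies neither predicate. The tempting "not XTurn" definition (o_turn_too_loose, a hypothetical name) would still call it O's turn.

```python
from typing import Dict, Tuple

Board = Dict[Tuple[int, int], str]  # assumed: (row, col) -> "X" or "O"


def count_marks(b: Board, player: str) -> int:
    # Like #{row, col: Int | s.board[row][col] = player}
    return len({(row, col) for (row, col), p in b.items() if p == player})


def x_turn(b: Board) -> bool:
    return count_marks(b, "X") == count_marks(b, "O")


def o_turn(b: Board) -> bool:
    # X has moved exactly once more than O
    return count_marks(b, "X") == count_marks(b, "O") + 1


def o_turn_too_loose(b: Board) -> bool:
    # The tempting but flawed definition: "not XTurn"
    return not x_turn(b)


cheating = {(0, 0): "X", (0, 1): "X"}  # X moved twice, O never moved
print(x_turn(cheating), o_turn(cheating), o_turn_too_loose(cheating))  # False False True
```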

Forge supports arithmetic operations on integers like add. Forge integers are signed (i.e., can be positive or negative) and are bounded by a bit width, which defaults to 4 bits. The number of available integers is always $2^k$, where $k$ is the bit width.

Forge follows the 2's complement arithmetic convention, which means that the available integers are split evenly between positive and negative numbers, but counting 0 as "positive". So with 4 bits, we can represent numbers between -8 and 7 (inclusive).

This means that (although it doesn't matter for this model yet) arithmetic operations can overflow, just like primitive integers in languages like Java! For example, if we're working with 4-bit integers, then add[7,1] will be -8. You can experiment with this in the visualizer's evaluator, which we'll be using a lot after the initial modeling tour is done.

Don't try to use + for addition in any Forge language. Use add instead; this is because + is reserved for something else (which we'll explain later).
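Python's own integers never overflow, but the wraparound described above can be simulated by reducing each result modulo $2^k$ into the signed range. This is a sketch of two's-complement arithmetic in general. The helper names wrap and add are hypothetical and only mimic the behavior of Forge's add; this isn't how Forge computes.

```python
def wrap(n: int, bitwidth: int = 4) -> int:
    """Map n into the signed range [-2**(k-1), 2**(k-1) - 1], where k = bitwidth,
    the way two's-complement arithmetic wraps around."""
    size = 2 ** bitwidth
    half = size // 2
    return (n + half) % size - half


def add(a: int, b: int, bitwidth: int = 4) -> int:
    """Addition that overflows like a k-bit signed integer."""
    return wrap(a + b, bitwidth)


print(add(7, 1))    # -8
print(add(-8, -1))  # 7
print(add(3, 4))    # 7
```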

Winning the Game

What does it mean to win? A player has won on a given board if:

- they have placed their mark in all 3 columns of a row;

- they have placed their mark in all 3 rows of a column; or

- they have placed their mark in all 3 squares of a diagonal.

We'll express this in a winner predicate that takes the current board and a player name. Let's also define a couple of helper predicates along the way:

```forge
pred winRow[s: Board, p: Player] {
    -- note we cannot use `all` here, because there are more Ints than valid rows
    some row: Int | {
        s.board[row][0] = p
        s.board[row][1] = p
        s.board[row][2] = p
    }
}

pred winCol[s: Board, p: Player] {
    some column: Int | {
        s.board[0][column] = p
        s.board[1][column] = p
        s.board[2][column] = p
    }
}

pred winner[s: Board, p: Player] {
    winRow[s, p]
    or
    winCol[s, p]
    or
    {
        s.board[0][0] = p
        s.board[1][1] = p
        s.board[2][2] = p
    } or {
        s.board[0][2] = p
        s.board[1][1] = p
        s.board[2][0] = p
    }
}
```
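For contrast with the declarative predicates, here is roughly how the same winning conditions might look as an ordinary Python function. It's a sketch under our own assumption that a board is a dict from (row, col) to "X" or "O". Python's any and all play the roles of Forge's some and all here. The difference is that they compute an answer for one given board, rather than constraining which boards a solver may find.

```python
from typing import Dict, Tuple

Board = Dict[Tuple[int, int], str]  # assumed: (row, col) -> "X" or "O"


def win_row(b: Board, p: str) -> bool:
    # Checks rows 0..2 only; the Forge version's `some row: Int` also ranges over
    # out-of-bounds rows, which matters only for boards that aren't well-formed.
    return any(all(b.get((row, col)) == p for col in range(3)) for row in range(3))


def win_col(b: Board, p: str) -> bool:
    return any(all(b.get((row, col)) == p for row in range(3)) for col in range(3))


def winner(b: Board, p: str) -> bool:
    main_diagonal = all(b.get((i, i)) == p for i in range(3))      # (0,0) (1,1) (2,2)
    anti_diagonal = all(b.get((i, 2 - i)) == p for i in range(3))  # (0,2) (1,1) (2,0)
    return win_row(b, p) or win_col(b, p) or main_diagonal or anti_diagonal


print(winner({(1, 0): "X", (1, 1): "X", (1, 2): "X"}, "X"))  # True (middle row)
print(winner({(0, 2): "O", (1, 1): "O", (2, 0): "O"}, "X"))  # False (O won, not X)
```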

After writing these domain predicates, we're reaching a fairly complete model for a single tic-tac-toe board. Let's decide how to fix the issue we saw above (the reason why OTurn couldn't be the negation of XTurn): perhaps a player has moved too often.

Should we add something like OTurn[s] or XTurn[s] to our wellformedness predicate? Not necessarily! If we then later enforced wellformedness for all boards, that would exclude "cheating" instances where a player has more moves on the board than are allowed. Whether that's what we want depends on how we intend to use the wellformed predicate:

- If we were only ever generating valid boards, a cheating state might well be spurious, or at least undesirable. In that case, we might prevent such states in wellformed and rule them out.

- If we were generating arbitrary (not necessarily valid) boards, being able to see a cheating state might be useful. In that case, we'd leave it out of wellformed.

- If we're interested in verification, e.g., we are asking whether the game of Tic-Tac-Toe enables ever reaching a cheating board, we shouldn't add not cheating to wellformed. Because wellformed also excludes garbage boards, we'd probably use it in our verification, in which case Forge would never find us a counterexample!

Notice the similarity between this issue and what we do in property-based testing. Here, we're forced to distinguish between what a reasonable board is (analogous to the generator's output in PBT) and what a reasonable behavior is (analogous to the validity predicate in PBT). One narrows the scope of possible worlds to avoid true "garbage"; the other checks whether the system behaves as expected in one of those worlds.

We'll come back to this later, when we've had a bit more modeling experience. For now, let's separate our goal into a new predicate called balanced, and add it to our run command above so that Forge will find us an instance where some board is both balanced and wellformed:

```forge
pred balanced[s: Board] {
    XTurn[s] or OTurn[s]
}

run { some b: Board | wellformed[b] and balanced[b]}
```

If we click the "Next" button a few times, we see that not all is well: we're getting boards where wellformed is violated (e.g., entries at negative rows). Why is this happening?

We're getting this because of how the run was phrased. We asked for an instance where some board was well-formed and balanced, not one where all boards were. Our run is satisfied by any instance where at least one Board is well-formed and balanced; the others won't affect the truth of the constraint. By default, Forge will find instances with up to 4 Boards. So we can fix the problem either by telling Forge to find instances with only 1 Board:

run { some b: Board | wellformed[b] and balanced[b] } for exactly 1 Board

or by saying that all boards must be well-formed and balanced:

run { all b: Board | wellformed[b] and balanced[b]}
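Forge's some and all quantifiers behave much like Python's any() and all() applied to the boards in an instance. In this minimal Python analogy, each board is a hypothetical list of (row, col, mark) moves, wellformed is a simplified stand-in for the Forge predicate, and balanced assumes X has made as many moves as O or one more. One good board is enough for the some version, no matter how much garbage sits beside it.

```python
def wellformed(moves) -> bool:
    """Hypothetical check: every move is in range and no square is used twice."""
    squares = [(r, c) for (r, c, _) in moves]
    in_range = all(0 <= r <= 2 and 0 <= c <= 2 for (r, c) in squares)
    return in_range and len(squares) == len(set(squares))


def balanced(moves) -> bool:
    """Assumed meaning: X has made as many moves as O, or exactly one more."""
    xs = sum(1 for (_, _, m) in moves if m == "X")
    os_ = sum(1 for (_, _, m) in moves if m == "O")
    return xs == os_ or xs == os_ + 1


good = [(1, 1, "X"), (0, 0, "O")]
negative_row = [(-1, 0, "X")]             # garbage: row out of range
double_move = [(0, 0, "X"), (0, 0, "O")]  # garbage: two moves in one square
instance = [good, negative_row, double_move]

# Like "some b: Board | ...": satisfied by the single good board.
print(any(wellformed(b) and balanced(b) for b in instance))  # True
# Like "all b: Board | ...": the garbage boards make it false.
print(all(wellformed(b) and balanced(b) for b in instance))  # False
```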

Practice with run

The run command can be used to give Forge more detailed instructions for its search.

No Boards

Exercise: Is it possible for an instance with no boards to still satisfy constraints like these?

run {
  all b: Board | {
    -- X has won, and the board looks OK
    wellformed[b]
    winner[b, X]
    balanced[b]
  }
}

Think, then read on!

Yes! There aren't any boards, so there's no obligation for anything to satisfy the constraints inside the quantifier. You can think of all as something like a for loop in Java or the all() function in Python: it checks every Board in the instance. If there aren't any, there's nothing to check, so the result is true.
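The same behavior is easy to see in Python. In this minimal sketch, never_satisfied is a hypothetical stand-in for the constraints inside the quantifier, written so that no board could ever pass. all() over zero boards is still true, while any(), the analogue of some, is false because there is no witness.

```python
def never_satisfied(board) -> bool:
    """Stand-in for the constraints inside the quantifier; it always fails."""
    return False


boards = []  # an instance with no Board atoms at all

print(all(never_satisfied(b) for b in boards))  # True: nothing to check
print(any(never_satisfied(b) for b in boards))  # False: no witness exists
```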

Adding More

This addition also requires that X moved in the middle of the board:

run {
  all b: Board | {
    -- X has won, and the board looks OK
    wellformed[b]
    winner[b, X]
    balanced[b]
    -- X has a mark in the middle square
    b.board[1][1] = X
  }
} for exactly 2 Board

Notice that, because we said exactly 2 Board here, Forge must find instances containing 2 tic-tac-toe boards, and both of them must satisfy the constraints: wellformedness, X moving in the middle, etc. You could ask for a board where X hasn't won by replacing winner[b, X] with not winner[b, X].

We'll come back to tic-tac-toe soon; for now, let's cover another static example.

Intro to Modeling Systems (Part 2: BSTs)

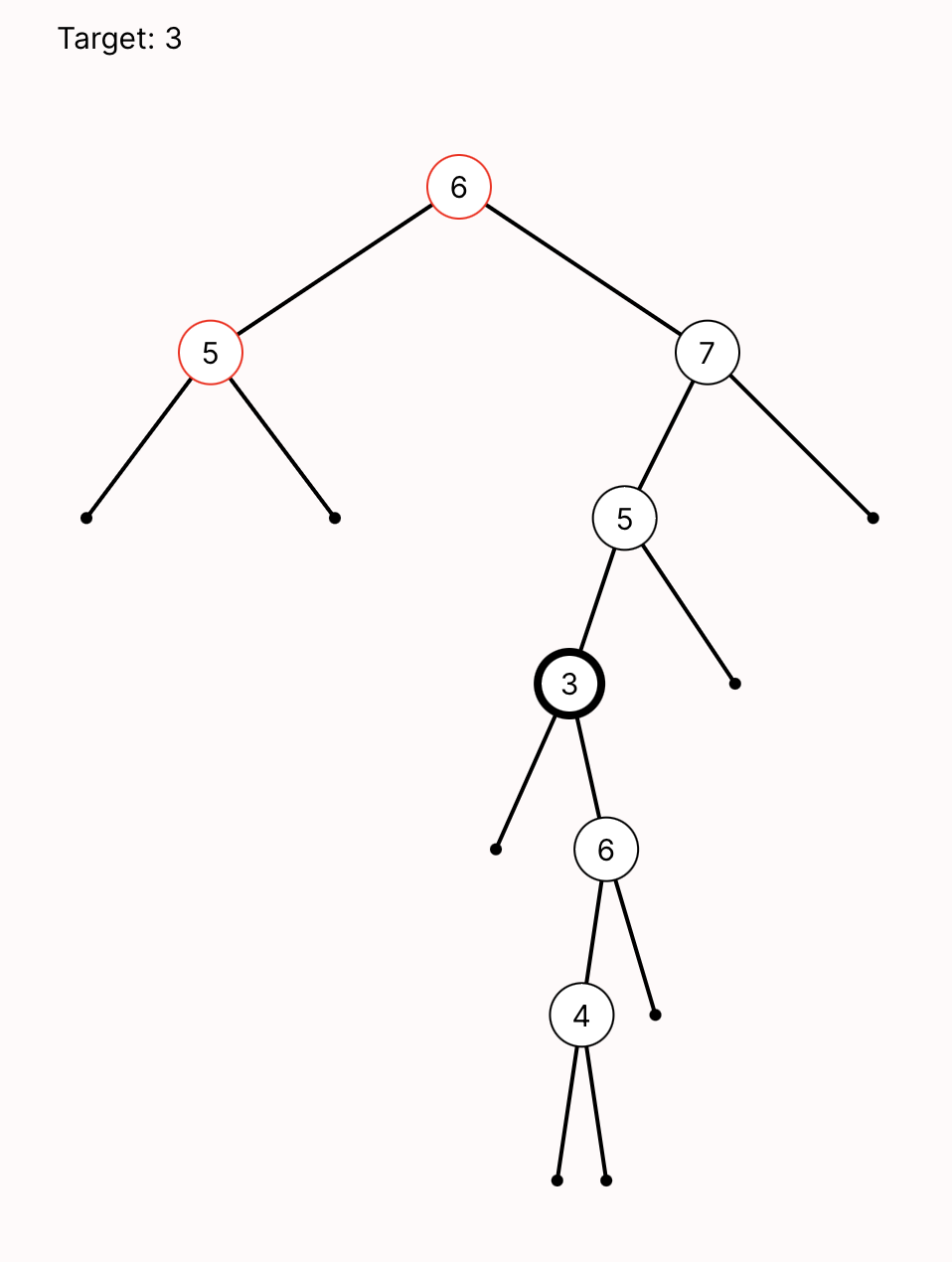

Now that we've written our first model—tic-tac-toe boards—let's switch to something a bit more serious: binary search trees. A binary search tree (BST) is a binary tree with an added property about its structure that allows it to efficiently answer many search queries related to the values it stores. Here's an example, drawn by hand:

Each node of the tree holds some value that the tree supports searching for. We'll call this value the search key, or just the key for each node. The common ancestor of every node in the tree is called the root.

This is obviously a binary tree, since it is a tree where every node has at most 2 children. What makes it a binary search tree is the invariant that every node N obeys:

- all left-descendants of N have a key less than N's key; and

- all right-descendants of N have a key greater than or equal to N's key.

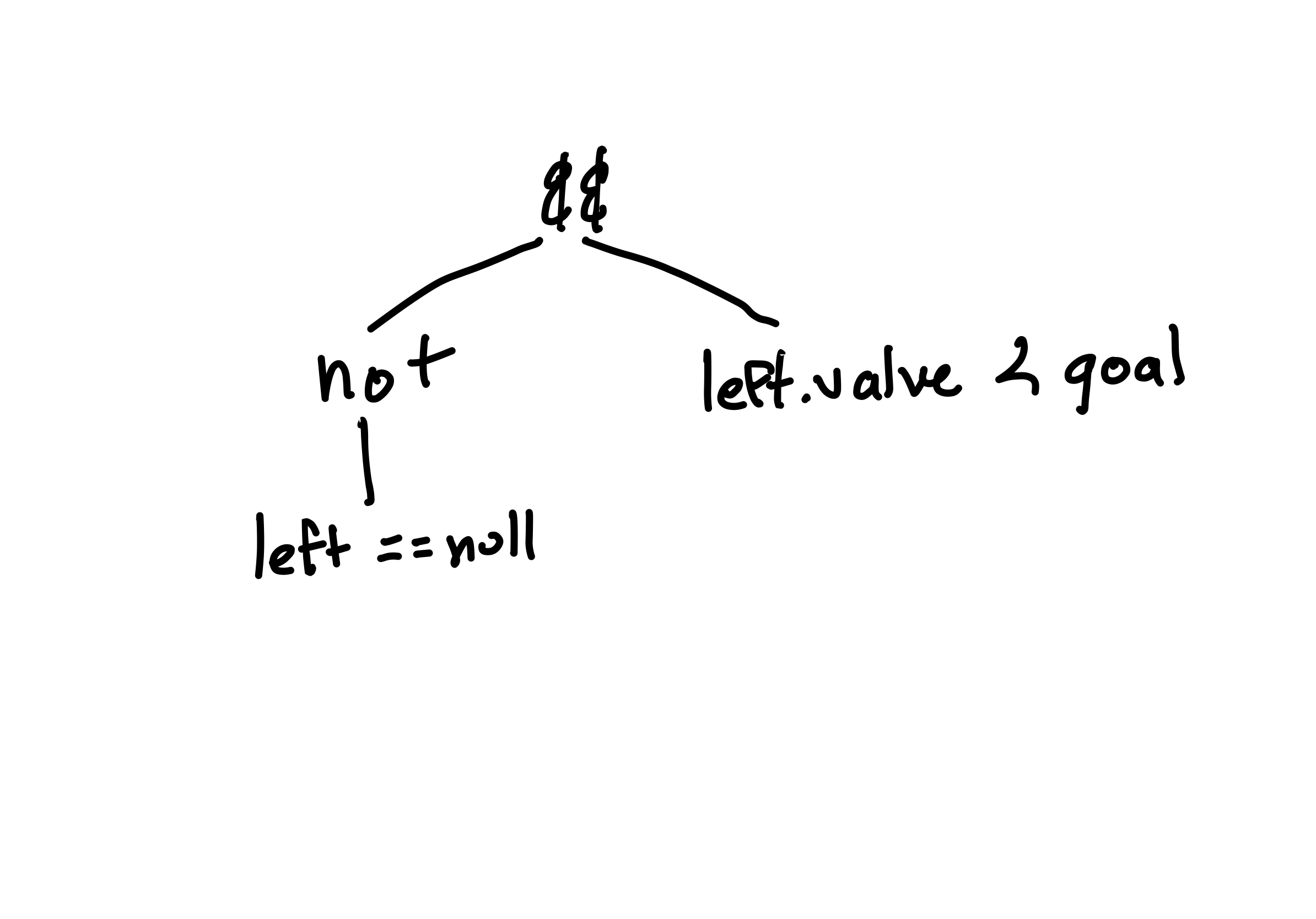
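For comparison with the Forge model we're about to build, here is a minimal Python sketch that checks the invariant directly against its definition: for each node, gather every node in its left and right subtrees and compare keys. The Node class is a hypothetical stand-in, and this direct check is quadratic; it is written for clarity, not speed.

```python
from dataclasses import dataclass
from typing import Iterator, Optional


@dataclass
class Node:
    key: int
    left: Optional["Node"] = None
    right: Optional["Node"] = None


def subtree_nodes(n: Optional[Node]) -> Iterator[Node]:
    """Yield n and every node below it (nothing if n is None)."""
    if n is None:
        return
    yield n
    yield from subtree_nodes(n.left)
    yield from subtree_nodes(n.right)


def is_bst(n: Optional[Node]) -> bool:
    """Every left-descendant is smaller than n.key; every right-descendant is >= it."""
    if n is None:
        return True
    ok_left = all(d.key < n.key for d in subtree_nodes(n.left))
    ok_right = all(d.key >= n.key for d in subtree_nodes(n.right))
    return ok_left and ok_right and is_bst(n.left) and is_bst(n.right)


tree = Node(5, Node(3, Node(1), Node(4)), Node(8, None, Node(9)))
print(is_bst(tree))  # True
```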

When you're first learning about binary search trees, it's easy to phrase the invariant wrong:

- the left child of N (if any) has a key less than N's key; and

- the right child of N (if any) has a key greater than or equal to N's key.

With experience, it's straightforward to see that this is too weak; search will break. But at first that isn't so obvious. It would be interesting if we could use Forge to help us understand the difference and its impact on searching the tree.
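To see the difference concretely, here is a minimal Python sketch (again with a hypothetical Node class) of a tree that satisfies the weaker, child-only rule but not the real invariant, together with an ordinary BST search that then misses a key that is actually present.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    key: int
    left: Optional["Node"] = None
    right: Optional["Node"] = None


def weak_ok(n: Optional[Node]) -> bool:
    """The too-weak rule: compare each node only with its immediate children."""
    if n is None:
        return True
    if n.left is not None and not n.left.key < n.key:
        return False
    if n.right is not None and not n.right.key >= n.key:
        return False
    return weak_ok(n.left) and weak_ok(n.right)


def search(n: Optional[Node], k: int) -> bool:
    """Standard BST search: go left if k is smaller, otherwise go right."""
    while n is not None:
        if k == n.key:
            return True
        n = n.left if k < n.key else n.right
    return False


def contains(n: Optional[Node], k: int) -> bool:
    """Exhaustive search that ignores ordering, for comparison."""
    return n is not None and (n.key == k or contains(n.left, k) or contains(n.right, k))


# 7 sits in the LEFT subtree of 5, which the real invariant forbids.
tree = Node(5, left=Node(3, right=Node(7)))
print(weak_ok(tree))      # True
print(contains(tree, 7))  # True
print(search(tree, 7))    # False: search goes right at 5 and finds nothing
```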

Let's start modeling. As with programming, it's a good idea to start simple, and add complexity and optimization after. So we'll start with plain binary trees, and then add the invariant.

Like with tic-tac-toe, we'll follow this rough 5-step progression:

- define the pertinent datatypes and fields;

- define a well-formedness predicate;

- write some examples;

- run and exercise the base model;

- write domain predicates.

Keep in mind that this isn't a strict "waterfall" style progression; we may return to previous steps if we discover it's necessary.

Datatypes

A binary tree is made up of nodes. Each node in the tree has at most one left child and at most one right child. While nodes in the tree can hold values of almost any type, for simplicity we'll stick to integers.

Unlike in tic-tac-toe, this definition is recursive:

#lang forge/froglet

sig Node {
  key: one Int,     -- every node has some key
  left: lone Node,  -- every node has at most one left-child
  right: lone Node  -- every node has at most one right-child
}

Recall that a sig is a datatype, each of which may have a set of fields. Here, we're saying that there is a datatype called Node, and that every Node has a key, left, and right field.
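If it helps to see the sig in more familiar terms, here is a rough Python analogue. It is an analogy only, since Forge sigs are not classes: Optional plays the role of lone, and a plain int plays the role of one Int. The analogy also shows that nothing in the declaration alone stops a node from pointing back at itself; ruling out such shapes is the job of a well-formedness predicate.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(eq=False)  # compare nodes by identity, as Forge atoms are distinct
class Node:
    key: int                        # like `key: one Int`
    left: Optional["Node"] = None   # like `left: lone Node`
    right: Optional["Node"] = None  # like `right: lone Node`


root = Node(2, left=Node(1), right=Node(3))

# The declaration alone permits a cycle: a node that is its own left child.
loop = Node(0)
loop.left = loop
print(loop.left is loop)  # True
```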

Wellformedness for Binary Trees

What makes a binary tree a binary tree? We might start by saying that:

- it's single-tree-shaped: there are no cycles and all nodes have at most one parent node; and

- it's connected: all non-root nodes have a common ancestor.

It's sometimes useful to write domain predicates early, and then use them to define wellformedness more clearly. For example, it might be useful to write a helper that describes what it means for a node to be a root node, i.e., the common ancestor of every node in the tree:

#lang forge/froglet

sig Node {
  key: one Int,     -- every node has some key
  left: lone Node,  -- every node has at most one left-child
  right: lone Node  -- every node has at most one right-child
}

pred isRoot[n: Node] {
  -- a node is a root if it has no ancestor
  no n2: Node | n = n2.left or n = n2.right
}

Then we'll use the isRoot helper in our wellformed predicate. But to write this predicate, there's a new challenge. We'll need to express constraints like "no node can reach itself via left or right fields". So far we've only spoken of a node's immediate left or right child. Instead, we now need a way to talk about reachability over any number of left or right fields. Forge provides a helper, reachable, that makes this straightforward.

The built-in reachable predicate returns true if and only if its first argument is reachable from its second argument via some sequence of the fields given as its remaining arguments. Thus, reachable[n1, anc, left, right] means: "anc can reach n1 via some sequence of left and right fields."

For reasons we'll explore later, reachable can be subtle; if you're curious now, see the Static Models Q&A for a discussion of this.
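Here is a minimal Python sketch of the same idea. It assumes the semantics described above plus one detail: reachability takes at least one field step, which is what lets a constraint like not reachable[n, n, left, right] rule out cycles without ruling out every node. The function names mirror the Forge helpers, but this is a hypothetical analogy, not how Forge implements them.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass(eq=False)  # eq=False: nodes are compared and hashed by identity
class Node:
    key: int
    left: Optional["Node"] = None
    right: Optional["Node"] = None


Field = Callable[[Node], Optional[Node]]


def left(n: Node) -> Optional[Node]:
    return n.left


def right(n: Node) -> Optional[Node]:
    return n.right


def reachable(target: Node, start: Node, *fields: Field) -> bool:
    """True iff target is reachable from start in ONE OR MORE steps along fields."""
    frontier: List[Node] = [f(start) for f in fields if f(start) is not None]
    seen = set()
    while frontier:
        n = frontier.pop()
        if n is target:
            return True
        if n in seen:
            continue
        seen.add(n)
        frontier.extend(f(n) for f in fields if f(n) is not None)
    return False


def is_root(n: Node, nodes: List[Node]) -> bool:
    """A node is a root if no node has it as a left or right child."""
    return not any(m.left is n or m.right is n for m in nodes)


leaf = Node(1)
root = Node(2, left=leaf)
print(reachable(leaf, root, left, right))  # True
print(reachable(root, root, left, right))  # False: zero steps don't count
```

Note the argument order, which matches the Forge helper: the node being reached comes first, and the starting node second.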

Using reachable, we can now write:

pred wellformed {
  -- no cycles: no node can reach itself via a succession of left and right fields
  all n: Node | not reachable[n, n, left, right]

  -- all non-root nodes have a common ancestor from which both are reachable
  -- the "disj" keyword means that n1 and n2 must be _different_
  all disj n1, n2: Node | (not isRoot[n1] and not isRoot[n2]) implies {
    some anc: Node | reachable[n1, anc, left, right] and
                     reachable[n2, anc, left, right]
  }

  -- nodes have a unique parent (if any)
  all disj n1, n2, n3: Node |
    not ((n1.left = n3 or n1.right = n3) and (n2.left = n3 or n2.right = n3))
}
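Reading a predicate like this can be easier next to a concrete analogue. Below is a minimal Python transliteration of the three constraints exactly as written, including their quantifier structure, for comparison only. It assumes a hypothetical representation in which nodes is a list of names and left/right are dicts from a node's name to its child's name, and it assumes reachability takes one or more steps.

```python
from itertools import permutations


def reachable(target, start, *fields):
    """True iff target is reachable from start in one or more field steps.

    Each field is a dict from a node name to its child's name.
    """
    frontier = [f[start] for f in fields if start in f]
    seen = set()
    while frontier:
        n = frontier.pop()
        if n == target:
            return True
        if n not in seen:
            seen.add(n)
            frontier.extend(f[n] for f in fields if n in f)
    return False


def wellformed(nodes, left, right):
    """Transliteration of the three constraints in the Forge predicate, as written."""

    def is_root(n):
        return not any(left.get(m) == n or right.get(m) == n for m in nodes)

    def child_of(parent, n):
        return left.get(parent) == n or right.get(parent) == n

    # all n: Node | not reachable[n, n, left, right]
    no_cycles = all(not reachable(n, n, left, right) for n in nodes)

    # all disj n1, n2: Node | (both non-root) implies some common ancestor
    common_ancestor = all(
        any(reachable(n1, anc, left, right) and reachable(n2, anc, left, right)
            for anc in nodes)
        for n1, n2 in permutations(nodes, 2)  # ordered pairs of distinct nodes
        if not is_root(n1) and not is_root(n2)
    )

    # all disj n1, n2, n3: Node | n3 is not a child of both n1 and n2
    unique_parent = all(
        not (child_of(n1, n3) and child_of(n2, n3))
        for n1, n2, n3 in permutations(nodes, 3)
    )
    return no_cycles and common_ancestor and unique_parent


chain = ["Node0", "Node1", "Node2", "Node3"]
chain_left = {"Node0": "Node1", "Node1": "Node2", "Node2": "Node3"}
print(wellformed(chain, chain_left, {}))  # True
```

Filtering pairs with the if clause mirrors implies: pairs where either node is a root are skipped, which is the same as the implication being vacuously true for them.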

Write an example or two

Let's write a few examples of well-formed and non-well-formed trees. I've listed some possibilities below.

Just like with testing a program, it's not always immediately clear when to stop testing a model. Fortunately, Forge gives us the ability to explore and exercise the model more thoroughly than just running a program does. So, while we're not completely out of danger, we do have new tools to protect ourselves with.

Positive examples

A binary tree with no nodes should be considered well-formed.

example p_no_nodes is wellformed for {
  no Node -- there are no nodes in the tree; it is empty
}

Drawing this one wouldn't be very interesting.

A binary tree with a single node should be considered well-formed.

example p_one_nodes is wellformed for {
  Node = `Node0 -- there is exactly one node in the tree, named "Node0".
  no left       -- there are no left-children
  no right      -- there are no right-children
}

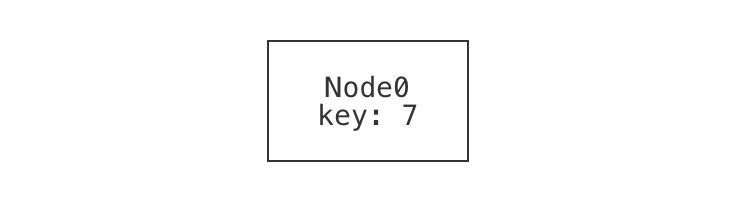

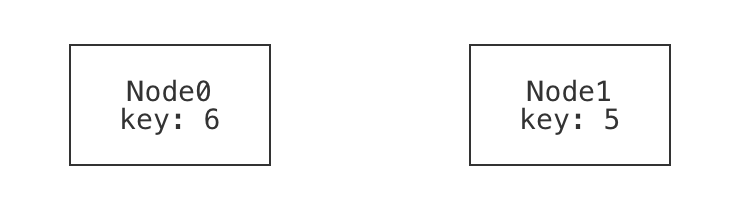

If we were going to draw the single-node example, we might draw it something like this:

In fact, this is what Forge's default visualizer can generate. Notice that the node has:

- a name or identity, which we supplied when we named it Node0 in the example; and

- a value for its key field, which we did not supply (and so Forge filled in).

Be careful not to confuse these! The distinction is roughly that between an object's identity and the value stored in one of its fields in a program: it's very possible (especially if we have a buggy program or model) that different nodes have the same key value.
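The programming side of that analogy, as a minimal Python sketch with a hypothetical Node class: two distinct objects can hold equal key values, and only an identity check tells them apart.

```python
class Node:
    def __init__(self, key: int) -> None:
        self.key = key  # a value, like the `key` field


node0 = Node(7)
node1 = Node(7)  # a different node that happens to share a key

print(node0 is node1)          # False: distinct identities, like `Node0 and `Node1
print(node0.key == node1.key)  # True: same key value
```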


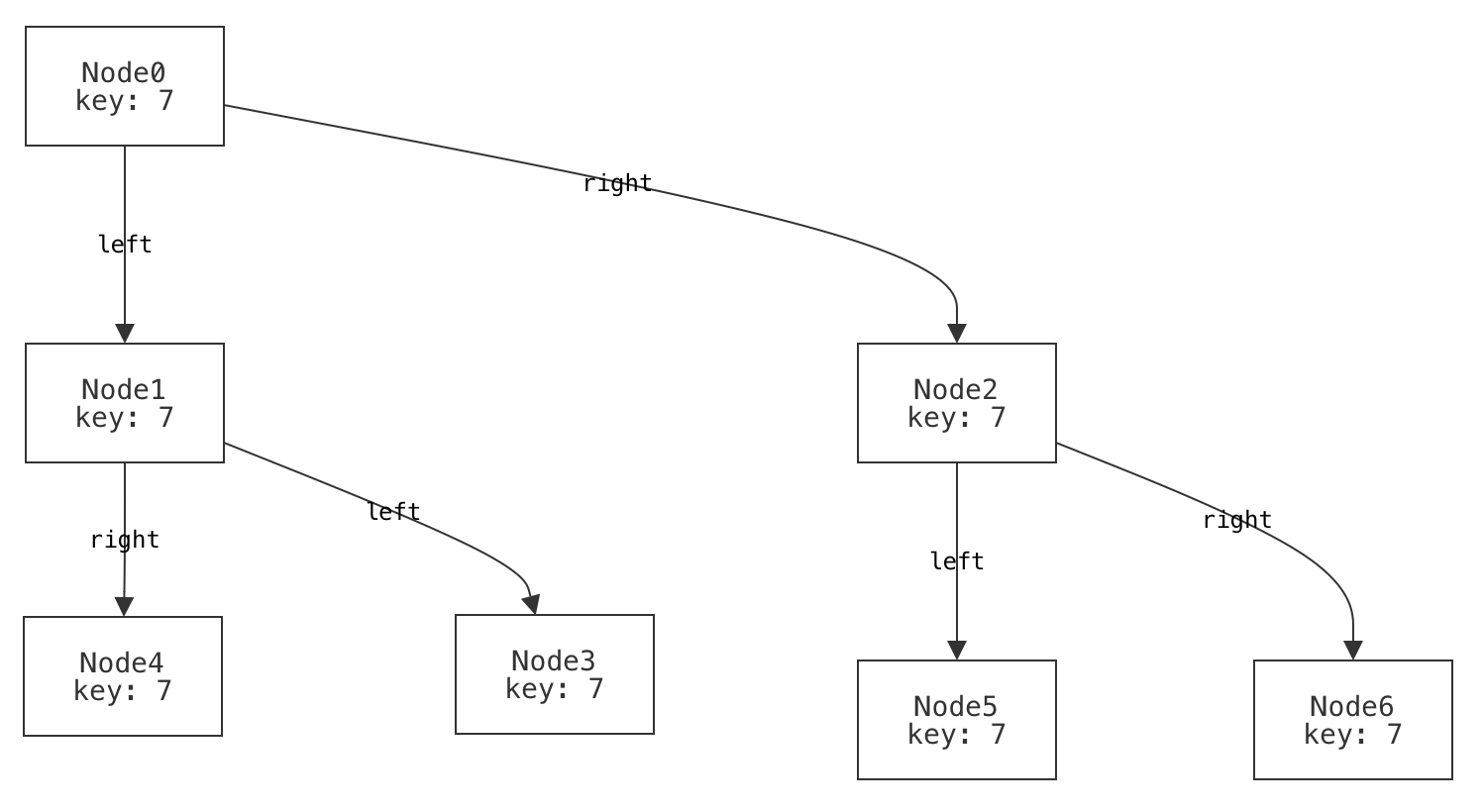

A binary tree with more than one row should be considered well-formed.

example p_multi_row is wellformed for {
  Node = `Node0 +                         -- row 0
         `Node1 + `Node2 +                -- row 1
         `Node3 + `Node4 + `Node5 + `Node6 -- row 2
  -- Define the child relationships (and lack thereof, for leaves)
  -- This is a bit verbose; we'll learn more concise syntax for this soon!
  `Node0.left = `Node1
  `Node0.right = `Node2
  `Node1.left = `Node3
  `Node1.right = `Node4
  `Node2.left = `Node5
  `Node2.right = `Node6
  no `Node3.left
  no `Node3.right
  no `Node4.left
  no `Node4.right
  no `Node5.left
  no `Node5.right
  no `Node6.left
  no `Node6.right
}

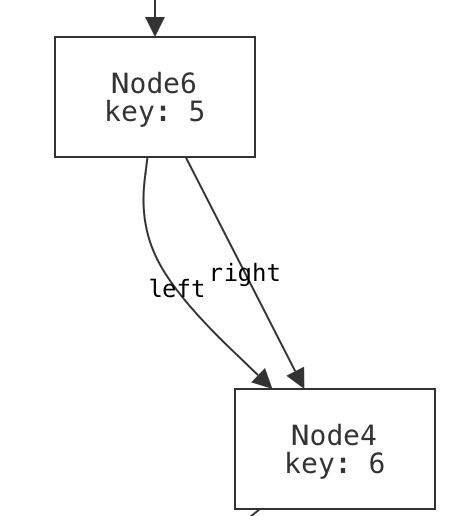

Wait a moment; there's something strange here. What do you notice about the way we've visualized this tree?

Think, then read on!

That visualization is not how we'd choose to draw the tree: it has the left field to the right and the right field to the left! This is because we used Forge's default visualizer. By default, Forge has no way to understand what "left" and "right" mean. We'll come back to this problem soon.

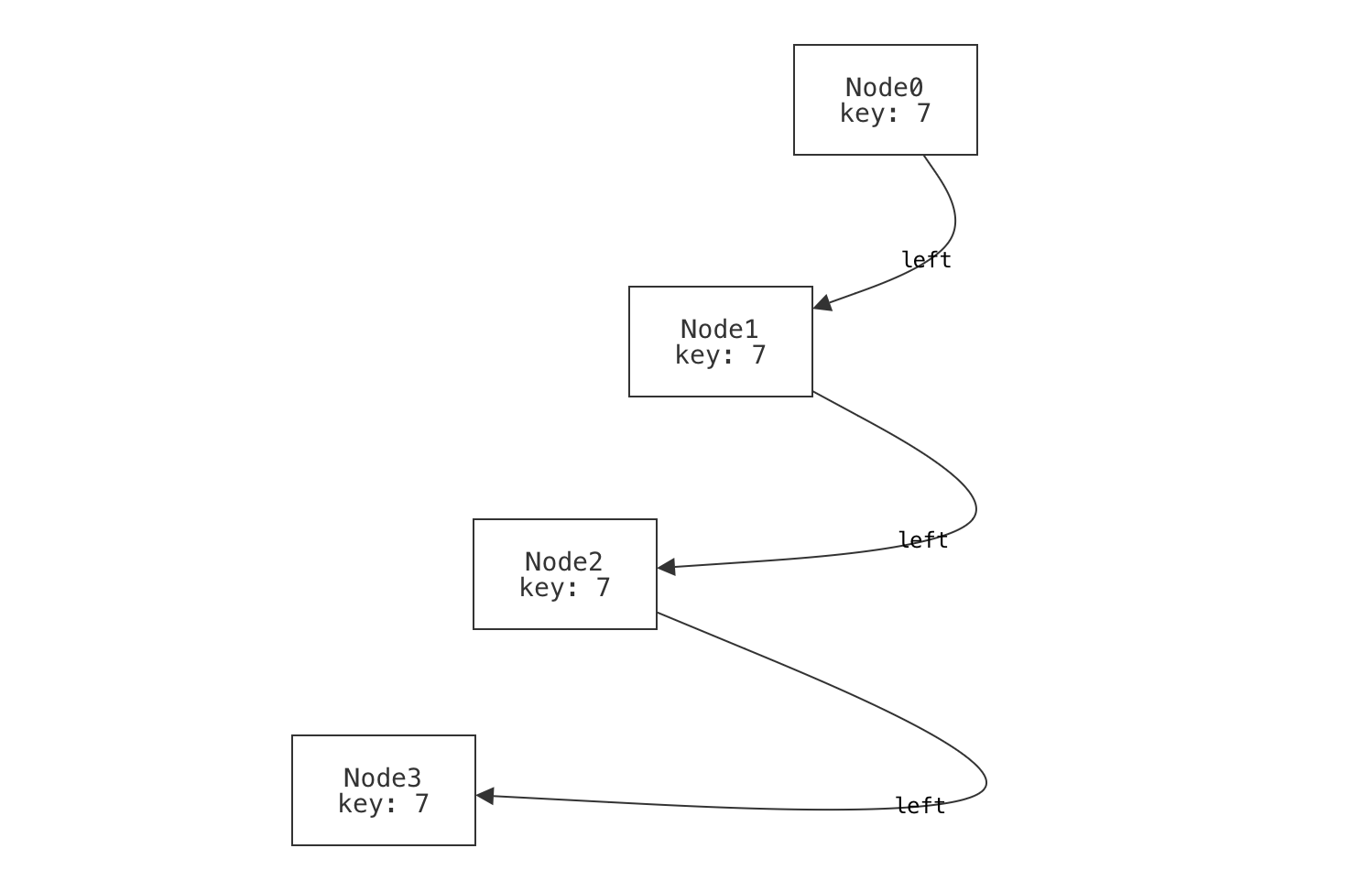

An unbalanced binary tree is still well-formed.

When we draw binary trees, often we draw them in a balanced way: nice and "bushy", with roughly even distribution of nodes to the left and right. But an unbalanced tree is still a tree, and we should make sure it counts as one.

example p_unbalanced_chain is wellformed for {
  Node = `Node0 + `Node1 + `Node2 + `Node3
  -- Form a long chain; it is still a binary tree.
  `Node0.left = `Node1
  no `Node0.right
  `Node1.left = `Node2
  no `Node1.right
  `Node2.left = `Node3
  no `Node2.right
  no `Node3.left
  no `Node3.right
}

Negative examples

It's best to write both positive and negative examples. Why? Well, suppose you needed to test a method or function that returned a boolean, like checking whether an integer is even. Here's an example in Python:

def is_even(x: int) -> bool:
    return x % 2 == 0

What's wrong with this test suite?

assert is_even(0) == True
assert is_even(2) == True
assert is_even(10000) == True
assert is_even(-10000) == True

The problem isn't only the size of the suite! By testing only values for which we expect True to be returned, we're neglecting half the problem. We'd never catch buggy implementations like this one:

def is_even(x: int) -> bool:
    return True
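A balanced suite pairs the positive assertions with negative ones. In this sketch, the hypothetical helper run_suite runs both kinds against an implementation; the always-True version passes every positive test but is caught by the negatives.

```python
def is_even(x: int) -> bool:
    return x % 2 == 0


def is_even_buggy(x: int) -> bool:
    return True


def run_suite(f) -> bool:
    """Return True iff f passes both the positive and the negative tests."""
    positives = [0, 2, 10000, -10000]  # expect True
    negatives = [1, 3, 9999, -9999]    # expect False
    return all(f(x) for x in positives) and not any(f(x) for x in negatives)


print(run_suite(is_even))        # True
print(run_suite(is_even_buggy))  # False
```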

Forge predicates are very much like boolean-valued functions, so it's important to exercise them in both directions. Here are some negative examples:

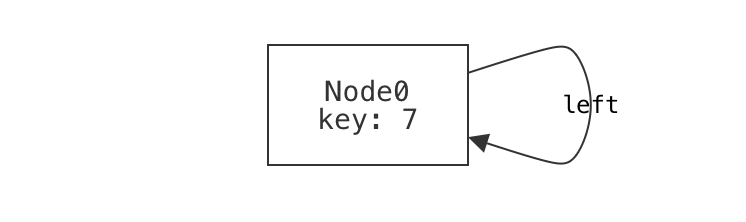

A single node that is its own left-child is not well-formed.

example n_own_left is {not wellformed} for {
  Node = `Node0
  `Node0.left = `Node0
  no `Node0.right
}

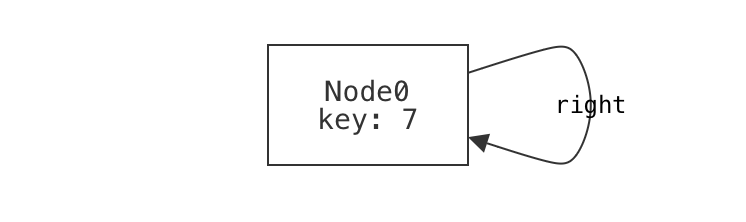

A single node that is its own right-child is not well-formed.

example n_own_right is {not wellformed} for {
  Node = `Node0
  no `Node0.left
  `Node0.right = `Node0
}

A tree in which a node can reach itself via a longer cycle, using both left- and right-children, is not well-formed.

example n_mixed_cycle is {not wellformed} for {
  Node = `Node0 + `Node1 + `Node2
  `Node0.left = `Node1
  no `Node0.right
  no `Node1.left
  `Node1.right = `Node2
  `Node2.left = `Node0
  no `Node2.right
}

A "forest" of multiple, disconnected trees is not well-formed.

example n_forest is {not wellformed} for {
  Node = `Node0 + `Node1
  no `Node0.left
  no `Node0.right
  no `Node1.left
  no `Node1.right
}

We wouldn't normally be able to check these examples until we'd finished writing the wellformed predicate, but it can still be useful to create a few examples first, to help guide the constraints you write. Binary trees are a fairly simple domain; imagine modeling something like the Java class system instead. Things can get tricky fast, and it's good to have a few concrete cases in mind.

Run the examples

Click the run button, and Forge will check that each example satisfies (or, for negative examples, fails to satisfy) the wellformed predicate. One fails, but why? Notice two things:

- The failing example is a negative one. We expected this instance to be ruled out by wellformed, but it was not. This points to a potential under-constraint bug in wellformed.

- We intended the example to fail because it contains separate, disconnected trees. This gives us a hint about the nature of the missing constraint.

Except...

We already added a constraint that forces connectivity. Didn't we?

all disj n1, n2: Node | (not isRoot[n1] and not isRoot[n2]) implies {